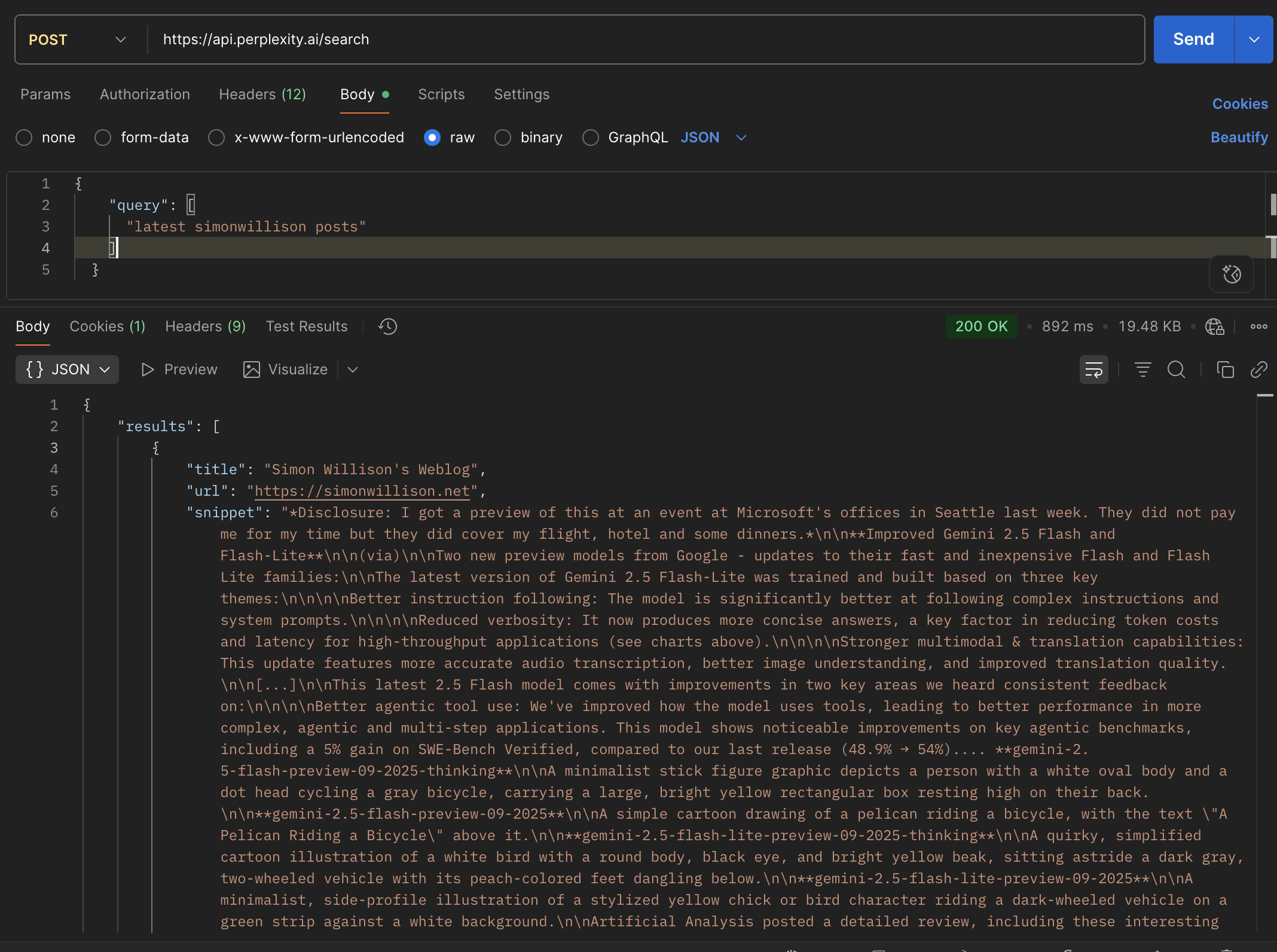

Perplexity Search API

We can now perform real-time web search with the Perplexity Search API, much like Exa.ai or Brave Search.

If you have Perplexity Pro, you get $5 of API credits per month, which you can also use for this API.

How to get started

First, you need a new API key. Older API keys do not work with the Search API, so make sure to create a new one. Then send a request to the search endpoint:

curl --location 'https://api.perplexity.ai/search' \
  --header "Authorization: Bearer $YOUR_API_KEY" \
  --header 'Content-Type: application/json' \
  --data '{
  "query": [
    "latest simonwillison posts"
  ]
}'
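
For scripting, the same request can be made from Python. The following is a minimal sketch using only the standard library (`json`, `urllib.request`), not an official client. It assumes the key is stored in an environment variable named `PERPLEXITY_API_KEY` (a name chosen here, not one the API requires), and the helper names `build_search_request`, `search`, and `summarize_results` are hypothetical. It relies only on the fields visible in the response below (`results`, `title`, `url`, `date`); any other body fields you pass through `extra` should be checked against the API reference first.

```python
import json
import os
import urllib.request

# Endpoint taken from the curl example above.
SEARCH_URL = "https://api.perplexity.ai/search"


def build_search_request(queries, api_key, extra=None):
    """Build a POST request equivalent to the curl example.

    `queries` may be a single string or a list of strings; it is sent as the
    "query" array, as in the curl body. `extra` is an optional dict of further
    body fields (check the API reference for which ones are supported).
    """
    if isinstance(queries, str):
        # Avoid list("abc") splitting a single query into characters.
        queries = [queries]
    body = {"query": list(queries)}
    if extra:
        body.update(extra)
    return urllib.request.Request(
        SEARCH_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def search(queries, api_key=None, timeout=30):
    """Send the search request and return the decoded JSON response."""
    # PERPLEXITY_API_KEY is an assumed variable name, not one the API requires.
    if api_key is None:
        api_key = os.environ["PERPLEXITY_API_KEY"]
    request = build_search_request(queries, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def summarize_results(response_json):
    """Return (title, url, date) tuples, the fields seen in the example response."""
    return [
        (item.get("title"), item.get("url"), item.get("date"))
        for item in response_json.get("results", [])
    ]
```

For example, with `PERPLEXITY_API_KEY` set, `summarize_results(search(["latest simonwillison posts"]))` gives a compact list instead of the very long snippets. Keeping request construction separate from sending makes it easy to inspect the body and headers without spending credits. Non-2xx responses (for example, a rejected key) surface as `urllib.error.HTTPError`.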

Response

{
"results": [
{
"title": "Simon Willison's Weblog",
"url": "https://simonwillison.net",
"snippet": "*Disclosure: I got a preview of this at an event at Microsoft's offices in Seattle last week. They did not pay me for my time but they did cover my flight, hotel and some dinners.*\n\n**Improved Gemini 2.5 Flash and Flash-Lite**\n\n(via)\n\nTwo new preview models from Google - updates to their fast and inexpensive Flash and Flash Lite families:\n\nThe latest version of Gemini 2.5 Flash-Lite was trained and built based on three key themes:\n\n\n\nBetter instruction following: The model is significantly better at following complex instructions and system prompts.\n\n\n\nReduced verbosity: It now produces more concise answers, a key factor in reducing token costs and latency for high-throughput applications (see charts above).\n\n\n\nStronger multimodal & translation capabilities: This update features more accurate audio transcription, better image understanding, and improved translation quality.\n\n[...]\n\nThis latest 2.5 Flash model comes with improvements in two key areas we heard consistent feedback on:\n\n\n\nBetter agentic tool use: We've improved how the model uses tools, leading to better performance in more complex, agentic and multi-step applications. This model shows noticeable improvements on key agentic benchmarks, including a 5% gain on SWE-Bench Verified, compared to our last release (48.9% → 54%).... 
**gemini-2.5-flash-preview-09-2025-thinking**\n\nA minimalist stick figure graphic depicts a person with a white oval body and a dot head cycling a gray bicycle, carrying a large, bright yellow rectangular box resting high on their back.\n\n**gemini-2.5-flash-preview-09-2025**\n\nA simple cartoon drawing of a pelican riding a bicycle, with the text \"A Pelican Riding a Bicycle\" above it.\n\n**gemini-2.5-flash-lite-preview-09-2025-thinking**\n\nA quirky, simplified cartoon illustration of a white bird with a round body, black eye, and bright yellow beak, sitting astride a dark gray, two-wheeled vehicle with its peach-colored feet dangling below.\n\n**gemini-2.5-flash-lite-preview-09-2025**\n\nA minimalist, side-profile illustration of a stylized yellow chick or bird character riding a dark-wheeled vehicle on a green strip against a white background.\n\nArtificial Analysis posted a detailed review, including these interesting notes about reasoning efficiency and speed:\n\n- In reasoning mode, Gemini 2.5 Flash and Flash-Lite Preview 09-2025 are more token-efficient, using fewer output tokens than their predecessors to run the Artificial Analysis Intelligence Index. Gemini 2.5 Flash-Lite Preview 09-2025 uses 50% fewer output tokens than its predecessor, while Gemini 2.5 Flash Preview 09-2025 uses 24% fewer output tokens.\n\n- Google Gemini 2.5 Flash-Lite Preview 09-2025 (Reasoning) is ~40% faster than the prior July release, delivering ~887 output tokens/s on Google AI Studio in our API endpoint performance benchmarking. This makes the new Gemini 2.5 Flash-Lite the fastest proprietary model we have benchmarked on the Artificial Analysis website... If you hide the system prompt and tool descriptions for your LLM agent, what you're actually doing is deliberately hiding the most useful documentation describing your service from your most sophisticated users!... **Qwen3-VL: Sharper Vision, Deeper Thought, Broader Action**\n\n(via)\n\nI've been looking forward to this. 
Qwen 2.5 VL is one of the best available open weight vision LLMs, so I had high hopes for Qwen 3's vision models.\n\nFirstly, we are open-sourcing the flagship model of this series: Qwen3-VL-235B-A22B, available in both Instruct and Thinking versions. The Instruct version matches or even exceeds Gemini 2.5 Pro in major visual perception benchmarks. The Thinking version achieves state-of-the-art results across many multimodal reasoning benchmarks.\n\nBold claims against Gemini 2.5 Pro, which are supported by a flurry of self-reported benchmarks.\n\nThis initial model is\n\n*enormous*. On Hugging Face both Qwen3-VL-235B-A22B-Instruct and Qwen3-VL-235B-A22B-Thinking are 235B parameters and weigh 471 GB. Not something I'm going to be able to run on my 64GB Mac!\n\nThe Qwen 2.5 VL family included models at 72B, 32B, 7B and 3B sizes. Given the rate Qwen are shipping models at the moment I wouldn't be surprised to see smaller Qwen 3 VL models show up in just the next few days.... Also from Qwen today, three new API-only closed-weight models: upgraded Qwen 3 Coder, Qwen3-LiveTranslate-Flash (real-time multimodal interpretation), and Qwen3-Max, their new trillion parameter flagship model, which they describe as their \"largest and most capable model to date\".\n\nPlus Qwen3Guard, a \"safety moderation model series\" that looks similar in purpose to Meta's Llama Guard. This one is open weights (Apache 2.0) and comes in 8B, 4B and 0.6B sizes on Hugging Face. There's more information in the QwenLM/Qwen3Guard GitHub repo.\n\n**Why AI systems might never be secure**.\n\nThe Economist have a new piece out about LLM security, with this headline and subtitle:\n\nWhy AI systems might never be secure\n\nA “lethal trifecta” of conditions opens them to abuse\n\nI talked with their AI Writer Alex Hern for this piece.\n\nThe gullibility of LLMs had been spotted before ChatGPT was even made public. 
In the summer of 2022, Mr Willison and others independently coined the term “prompt injection” to describe the behaviour, and real-world examples soon followed. In January 2024, for example, DPD, a logistics firm, chose to turn off its AI customer-service bot after customers realised it would follow their commands to reply with foul language.... That abuse was annoying rather than costly. But Mr Willison reckons it is only a matter of time before something expensive happens. As he puts it, “we’ve not yet had millions of dollars stolen because of this”. It may not be until such a heist occurs, he worries, that people start taking the risk seriously. The industry does not, however, seem to have got the message. Rather than locking down their systems in response to such examples, it is doing the opposite, by rolling out powerful new tools with the lethal trifecta built in from the start.\n\nThis is the clearest explanation yet I've seen of these problems in a mainstream publication. Fingers crossed relevant people with decision-making authority finally start taking this seriously!... *is*open weights, as Apache 2.0 Qwen3-Omni-30B-A3B-Instruct, Qwen/Qwen3-Omni-30B-A3B-Thinking, and Qwen3-Omni-30B-A3B-Captioner on HuggingFace. That Instruct model is 70.5GB so this should be relatively accessible for running on expensive home devices.\n\n- Qwen-Image-Edit-2509 is an updated version of their excellent Qwen-Image-Edit model which I first tried last month. Their blog post calls it \"the monthly iteration of Qwen-Image-Edit\" so I guess they're planning more frequent updates. The new model adds multi-image inputs. I used it via chat.qwen.ai to turn a photo of our dog into a dragon in the style of one of Natalie's ceramic pots.\n\nHere's the prompt I used, feeding in two separate images. Weirdly it used the edges of the landscape photo to fill in the gaps on the otherwise portrait output. 
It turned the chair seat into a bowl too!\n\n**CompileBench: Can AI Compile 22-year-old Code?**\n\n(via)\n\nInteresting new LLM benchmark from Piotr Grabowski and Piotr Migdał: how well can different models handle compilation challenges such as cross-compiling... Mistral quietly released two new models yesterday: Magistral Small 1.2 (Apache 2.0, 96.1 GB on Hugging Face) and Magistral Medium 1.2 (not open weights same as Mistral's other \"medium\" models.)\n\nDespite being described as \"minor updates\" to the Magistral 1.1 models these have one very notable improvement:\n\n- Multimodality: Now equipped with a vision encoder, these models handle both text and images seamlessly.\n\nMagistral is Mistral's reasoning model, so we now have a new reasoning vision LLM.\n\nThe other features from the tiny announcement on Twitter:\n\n- Performance Boost: 15% improvements on math and coding benchmarks such as AIME 24/25 and LiveCodeBench v5/v6.\n\n- Smarter Tool Use: Better tool usage with web search, code interpreter, and image generation.\n\n- Better Tone & Persona: Responses are clearer, more natural, and better formatted for you.\n\n**The Hidden Risk in Notion 3.0 AI Agents: Web Search Tool Abuse for Data Exfiltration**.\n\nAbi Raghuram reports that Notion 3.0, released yesterday, introduces new prompt injection data exfiltration vulnerabilities thanks to enabling lethal trifecta attacks.... ### Sept. 16, 2025\n\n**Announcing the 2025 PSF Board Election Results!**\n\nI'm happy to share that I've been re-elected for second term on the board of directors of the Python Software Foundation.\n\nJannis Leidel was also re-elected and Abigail Dogbe and Sheena O’Connell will be joining the board for the first time.",
"date": "2025-09-26",
"last_updated": "2025-09-26"
},
{
"title": "Series of posts - Simon Willison's Weblog",
"url": "https://simonwillison.net/series/",
"snippet": "## Series of posts\n\n### GPT-5\n\nMy posts following the launch of OpenAI's GPT-5 model.\n\n- GPT-5: Key characteristics, pricing and model card - Aug. 7, 2025, 5:36 p.m.\n\n- The surprise deprecation of GPT-4o for ChatGPT consumers - Aug. 8, 2025, 5:52 p.m.\n\n- GPT-5 Thinking in ChatGPT (aka Research Goblin) is shockingly good at search - Sept. 6, 2025, 7:31 p.m.\n\n- Recreating the Apollo AI adoption rate chart with GPT-5, Python and Pyodide - Sept. 9, 2025, 6:47 a.m.\n\n### How I blog\n\nPosts about this blog and how I approach writing online.\n\n- One year of blogging - June 12, 2003, 11:59 p.m.\n\n- Blogmarks - Nov. 24, 2003, 12:52 a.m.\n\n- 1000th Blogmark - Aug. 26, 2004, 12:30 a.m.\n\n- Implementing faceted search with Django and PostgreSQL - Oct. 5, 2017, 2:12 p.m.\n\n- One year of TILs - May 2, 2021, 6:01 p.m.\n\n- Twenty years of my blog - June 12, 2022, 10:59 p.m.\n\n- What to blog about - Nov. 6, 2022, 5:05 p.m.\n\n- Semi-automating a Substack newsletter with an Observable notebook - April 4, 2023, 5:55 p.m.\n\n- A homepage redesign for my blog's 22nd birthday - June 12, 2024, 7:59 p.m.\n\n- My approach to running a link blog - Dec. 22, 2024, 6:37 p.m.... ### Project\n\nInterviews with people who are building cool things.\n\n- Project: VERDAD - tracking misinformation in radio broadcasts using Gemini 1.5 - Nov. 7, 2024, 6:41 p.m.\n\n- Project: Civic Band - scraping and searching PDF meeting minutes from hundreds of municipalities - Nov. 16, 2024, 10:14 p.m.\n\n- Six short video demos of LLM and Datasette projects - Jan. 22, 2025, 2:09 a.m.\n\n### New features in sqlite-utils\n\nAny time I introduce a significant new feature in a release of my sqlite-utils package I write about it here.\n\n- sqlite-utils: a Python library and CLI tool for building SQLite databases - Feb. 25, 2019, 3:29 a.m.\n\n- Fun with binary data and SQLite - July 30, 2020, 11:22 p.m.\n\n- Executing advanced ALTER TABLE operations in SQLite - Sept. 
23, 2020, 1 a.m.\n\n- Refactoring databases with sqlite-utils extract - Sept. 23, 2020, 4:02 p.m.\n\n- Joining CSV and JSON data with an in-memory SQLite database - June 19, 2021, 10:55 p.m.\n\n- Apply conversion functions to data in SQLite columns with the sqlite-utils CLI tool - Aug. 6, 2021, 6:05 a.m.\n\n- What's new in sqlite-utils 3.20 and 3.21: --lines, --text, --convert - Jan. 11, 2022, 6:19 p.m.\n\n- sqlite-utils now supports plugins - July 24, 2023, 5:06 p.m.... ### How it's trained\n\nInvestigating the training data behind different machine learning models.\n\n- Exploring the training data behind Stable Diffusion - Sept. 5, 2022, 12:18 a.m.\n\n- Exploring 10m scraped Shutterstock videos used to train Meta's Make-A-Video text-to-video model - Sept. 29, 2022, 7:31 p.m.\n\n- Exploring MusicCaps, the evaluation data released to accompany Google's MusicLM text-to-music model - Jan. 27, 2023, 9:34 p.m.\n\n- What's in the RedPajama-Data-1T LLM training set - April 17, 2023, 6:57 p.m.\n\n### Datasette Lite\n\nA distribution of Datasette that runs entirely in the browser, using WebAssembly and Pyodide.\n\n- Datasette Lite: a server-side Python web application running in a browser - May 4, 2022, 3:16 p.m.\n\n- Joining CSV files in your browser using Datasette Lite - June 20, 2022, 9:20 p.m.\n\n- Plugin support for Datasette Lite - Aug. 17, 2022, 6:20 p.m.\n\n- Analyzing ScotRail audio announcements with Datasette - from prototype to production - Aug. 21, 2022, 2:04 a.m.\n\n- Weeknotes: Datasette Lite, s3-credentials, shot-scraper, datasette-edit-templates and more - Sept. 16, 2022, 2:55 a.m.... ### My open source process\n\nArticles about the process I use for developing my open source projects.\n\n- Documentation unit tests - July 28, 2018, 3:59 p.m.\n\n- How to cheat at unit tests with pytest and Black - Feb. 11, 2020, 6:56 a.m.\n\n- Open source projects: consider running office hours - Feb. 
19, 2021, 9:54 p.m.\n\n- How to build, test and publish an open source Python library - Nov. 4, 2021, 10:02 p.m.\n\n- How I build a feature - Jan. 12, 2022, 6:10 p.m.\n\n- Writing better release notes - Jan. 31, 2022, 8:13 p.m.\n\n- Software engineering practices - Oct. 1, 2022, 3:56 p.m.\n\n- Automating screenshots for the Datasette documentation using shot-scraper - Oct. 14, 2022, 11:44 p.m.\n\n- The Perfect Commit - Oct. 29, 2022, 8:41 p.m.\n\n- Coping strategies for the serial project hoarder - Nov. 26, 2022, 3:47 p.m.\n\n- Things I've learned about building CLI tools in Python - Sept. 30, 2023, 12:12 a.m.\n\n- Publish Python packages to PyPI with a python-lib cookiecutter template and GitHub Actions - Jan. 16, 2024, 9:59 p.m.\n\n- A selfish personal argument for releasing code as Open Source - Jan. 24, 2025, 9:46 p.m.... - Claude and ChatGPT for ad-hoc sidequests - March 22, 2024, 7:44 p.m.\n\n- Building and testing C extensions for SQLite with ChatGPT Code Interpreter - March 23, 2024, 5:50 p.m.\n\n- llm cmd undo last git commit - a new plugin for LLM - March 26, 2024, 3:37 p.m.\n\n- Running OCR against PDFs and images directly in your browser - March 30, 2024, 5:59 p.m.\n\n- Building files-to-prompt entirely using Claude 3 Opus - April 8, 2024, 8:40 p.m.\n\n- AI for Data Journalism: demonstrating what we can do with this stuff right now - April 17, 2024, 9:04 p.m.\n\n- Building search-based RAG using Claude, Datasette and Val Town - June 21, 2024, 8:44 p.m.\n\n- django-http-debug, a new Django app mostly written by Claude - Aug. 8, 2024, 3:26 p.m.\n\n- Building a tool showing how Gemini Pro can return bounding boxes for objects in images - Aug. 26, 2024, 4:55 a.m.... - Notes on using LLMs for code - Sept. 20, 2024, 3:10 a.m.\n\n- Video scraping: extracting JSON data from a 35 second screen capture for less than 1/10th of a cent - Oct. 17, 2024, 12:32 p.m.\n\n- Everything I built with Claude Artifacts this week - Oct. 
21, 2024, 2:32 p.m.\n\n- Run a prompt to generate and execute jq programs using llm-jq - Oct. 27, 2024, 4:26 a.m.\n\n- Prompts.js - Dec. 7, 2024, 8:35 p.m.\n\n- Building Python tools with a one-shot prompt using uv run and Claude Projects - Dec. 19, 2024, 7 a.m.\n\n- Here's how I use LLMs to help me write code - March 11, 2025, 2:09 p.m.\n\n- Not all AI-assisted programming is vibe coding (but vibe coding rocks) - March 19, 2025, 5:57 p.m.\n\n- AI assisted search-based research actually works now - April 21, 2025, 12:57 p.m.... ### Prompt injection\n\nA class of security vulnerabilities in software built on top of Large Language Models. See also my [prompt-injection tag](https://simonwillison.net/tags/prompt-injection/).",
"date": "2025-09-09",
"last_updated": "2025-09-10"
},
{
"title": "simonw - Overview",
"url": "https://github.com/simonw",
"snippet": "# Simon Willison simonw\n\nSponsor\n\n10.2k followers · 141 following\n\n- Datasette\n- Half Moon Bay, California\n- 18:08 - 7h behind\n- https://simonwillison.net/\n- @simonw\n- @simon@fedi.simonwillison.net\n- @simonwillison.net\n\nsimonw/README.md\n\nCurrently working on Datasette, LLM and associated projects. Read my blog, subscribe to my newsletter, follow me on Mastodon or on Bluesky.... |### Recent releases datasette-alerts-discord 0.1.0a1 - 2025-08-19datasette-alerts 0.0.1a3 - 2025-08-19llm-gemini 0.25 - 2025-08-18datasette-demo-dbs 0.1.1 - 2025-08-14llm 0.27.1 - 2025-08-12llm-anthropic 0.18 - 2025-08-05datasette-queries 0.1.2 - 2025-07-22datasette-public 0.3a3 - 2025-07-22More recent releases|### On my blog The Summer of Johann: prompt injections as far as the eye can see - 2025-08-15Open weight LLMs exhibit inconsistent performance across providers - 2025-08-15LLM 0.27, the annotated release notes: GPT-5 and improved tool calling - 2025-08-11Qwen3-4B-Thinking: \"This is art - pelicans don't ride bikes!\" - 2025-08-10My Lethal Trifecta talk at the Bay Area AI Security Meetup - 2025-08-09The surprise deprecation of GPT-4o for ChatGPT consumers - 2025-08-08More on simonwillison.net|### TIL Running a gpt-oss eval suite against LM Studio on a Mac - 2025-08-17Configuring GitHub Codespaces using devcontainers - 2025-08-13Rate limiting by IP using Cloudflare's rate limiting rules - 2025-07-03Using Playwright MCP with Claude Code - 2025-07-01Converting ORF raw files to JPEG on macOS - 2025-06-26Publishing a Docker container for Microsoft Edit to the GitHub Container Registry - 2025-06-21More on til.simonwillison.net|\n|--|--|--|\n\n How this works... ## Pinned Loading\n1. datasette\n\n An open source multi-tool for exploring and publishing data\n2. sqlite-utils\n\n Python CLI utility and library for manipulating SQLite databases\n3. llm\n\n Access large language models from the command-line\n4. 
shot-scraper\n\n A command-line utility for taking automated screenshots of websites\n5. files-to-prompt\n\n Concatenate a directory full of files into a single prompt for use with LLMs\n6. s3-credentials\n\n A tool for creating credentials for accessing S3 buckets\n\n## 4,381 contributions in the last year\nSkip to contributions year list\n\n### Activity overview\n\nContributed to\nsimonw/llm, simonw/tools, simonw/llm-gemini and 269 other repositories\n\nLoading... ## Contribution activity\n\n### August 2025\n\nCreated 51 commits in 14 repositories\n- simonw/llm 13 commits\n- simonw/tools 5 commits\n- datasette/datasette-demo-dbs 5 commits\n- simonw/til 4 commits\n- simonw/codespaces 4 commits\n- simonw/simonwillisonblog 4 commits\n- simonw/codespaces-llm 3 commits\n- simonw/llm-prices 3 commits\n- ivanfioravanti/qwen-image-mps 3 commits\n- simonw/llm-gemini 2 commits\n- simonw/llm-anthropic 2 commits\n- openai/gpt-oss 1 commit\n- openai/harmony 1 commit\n- simonw/llm-openai-plugin 1 commit\n\nCreated 4 repositories\n- simonw/gpt-oss Python\n\n This contribution was made on Aug 17\n- simonw/codespaces-llm\n\n This contribution was made on Aug 13\n- simonw/qwen-image-mps Python\n\n This contribution was made on Aug 11\n- simonw/harmony Rust\n\n This contribution was made on Aug 8\n\n#### Created a pull request in ivanfioravanti/qwen-image-mps that received 6 comments... ### Use inline script dependencies\n\nRefs:\n\n#2 I have not yet fully tested this, because I ran out of hard disk space trying to download the model! 
It does at least get as far as the …\n\n+24 −19 lines changed • 6 comments\n\nOpened 2 other pull requests in 2 repositories\n\nopenai/gpt-oss 1 merged\n\n- Fix for bug where / in model name causes evals to fail\n\n This contribution was made on Aug 17\n\nopenai/harmony 1 merged\n\n- Add 'DeveloperContent' to __all__\n\n This contribution was made on Aug 8\n\nReviewed 1 pull request in 1 repository\n\nsimonw/llm 1 pull request\n\n- Toolbox.add_tool() mechanism\n\n This contribution was made on Aug 11\n\n#### Created an issue in simonw/llm that received 8 comments... ### Release 0.27 with GPT-5 and various tool enhancements\n\nBig release: 0.26...ef3192b has 32 commits already.\n\n8\ncomments\n\nOpened 15 other issues in 9 repositories\n\nsimonw/llm 6 closed\n\n- LLM chat prompts are duplicated when logged to the database if a template is used\n\n This contribution was made on Aug 12\n- llm chat -t template doesn't correctly load tools\n\n This contribution was made on Aug 12\n- Misleading error message if Toolbox is missing\n\n This contribution was made on Aug 11\n- llm -m gpt-5 -o reasoning_effort minimal --save gm saves bad YAML\n\n This contribution was made on Aug 11\n- GPT-5\n\n This contribution was made on Aug 7\n- Ensure all extra OpenAI models keys are documented\n\n This contribution was made on Aug 3\n\ndatasette/datasette-demo-dbs 2 closed\n\n- On subsequent startups does not spot the databases\n\n This contribution was made on Aug 14\n- Initial plugin design\n\n This contribution was made on Aug 14\n\nencode/httpx 1 open\n\n- Mysterious 1.0.dev2 pre-release broke my stuff\n\n This contribution was made on Aug 20... 
openai/gpt-oss 1 open\n\n- Evals fail for models with / in the model ID\n\n This contribution was made on Aug 17\n\nsimonw/datasette 1 open\n\n- Document that async def startup() hook functions actually do work\n\n This contribution was made on Aug 14\n\ntonybaloney/llm-github-models 1 closed\n\n- Suggestion: use GITHUB_TOKEN if it is available automatically\n\n This contribution was made on Aug 12\n\nivanfioravanti/qwen-image-mps 1 closed\n\n- Suggestion: use inline script dependencies instead of requirements.txt\n\n This contribution was made on Aug 11\n\nsimonw/llm-openai-plugin 1 open\n\n- GPT-5\n\n This contribution was made on Aug 7\n\nsimonw/llm-anthropic 1 open\n\n- Claude Opus 4.1\n\n This contribution was made on Aug 5\n\nStarted 1 discussion in 1 repository\n\nmenloresearch/jan\n\n- Can't get the mcp-run-python MCP to work\n\n This contribution was made on Aug 12\n\n49 contributions in private repositories Aug 1 – Aug 20",
"date": "2024-08-18",
"last_updated": "2025-08-24"
},
{
"title": "Simon Willison’s Newsletter | Substack",
"url": "https://simonw.substack.com",
"snippet": "Simon Willison’s Newsletter\n\nSubscribe\n\nSign in\n\nHome\n\nArchive\n\nAbout\n\nClaude's new Code Interpreter\n\nPlus: Recreating the Apollo AI adoption rate chart with GPT-5, Python and Pyodide\n\nSep 9\n\n\n\nSimon Willison\n\n27\n\n2\n\nLatest\n\nTop\n\nDiscussions\n\nGPT-5 Thinking in ChatGPT (aka Research Goblin) is shockingly good at search\n\nAnd why the $1.5bn Anthropic books settlement may count as a win for Anthropic\n\nSep 7\n\n\n\nSimon Willison\n\n50\n\n2\n\nV&A East Storehouse and Operation Mincemeat in London\n\nPlus DeepSeek 3.1, gpt-realtime, and prompt injection against browser agents\n\nSep 2\n\n\n\nSimon Willison\n\n21\n\nPrompt injections as far as the eye can see\n\nPlus open weight LLM performance across providers, Qwen Image Edit, Gemma 3 270M\n\nAug 21\n\n\n\nSimon Willison\n\n37\n\nLLM 0.27, with GPT-5 and improved tool calling\n\nQwen3-4B-Thinking: \"This is art - pelicans don't ride bikes!\"\n\nAug 12\n\n\n\nSimon Willison\n\n23\n\n1\n\nGPT-5: Key characteristics, pricing and model card... 
Plus OpenAI's open weight models and a bunch more stuff about ChatGPT\n\nAug 8\n\n\n\nSimon Willison\n\n39\n\n6\n\nReverse engineering some updates to Claude\n\nPlus Qwen 3 Coder Flash, Gemini Deep Think, kimi-k2-turbo-preview\n\nAug 1\n\n\n\nSimon Willison\n\n32\n\n1\n\nMy 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX\n\nPlus the system prompt behind the new ChatGPT study mode\n\nJul 30\n\n\n\nSimon Willison\n\n32\n\n4\n\nSee all\n\nSimon Willison’s Newsletter\n\nAI, LLMs, web engineering, open source, data science, Datasette, SQLite, Python and more\n\nSubscribe\n\nRecommendations\n\nView all 7\n\nLatent.Space\n\nLatent.Space\n\nOne Useful Thing\n\nEthan Mollick\n\ntype click type\n\nbrian grubb\n\nExponential View\n\nAzeem Azhar\n\nImport AI\n\nJack Clark\n\nSimon Willison’s Newsletter\n\nSubscribe\n\nAbout\n\nArchive\n\nRecommendations\n\nSitemap\n\n© 2025 Simon Willison\n\nPrivacy\n\n\n\nTerms\n\n\n\nCollection notice\n\nStart writing\n\nGet the app\n\nSubstack\n\nis the home for great culture\n\nThis site requires JavaScript to run correctly. Please",
"date": "2025-09-09",
"last_updated": "2025-09-16"
},
{
"title": "Musings in a wired world",
"url": "https://simonwilson.net",
"snippet": "## Latest Posts\n\n\n\n### Italian Traffic–A Metaphor\n\nHaving recently returned from a wonderful time in the Bay of Naples for our anniversary, I was reminded once again of the madness that is Italian driving. With Italian driving there is always a lot of noise, activity, and speed… Continue reading\n\n\n\n### 25th Anniversary\n\nIt will quiet around here for a few days as my wife and I have the pleasure of celebrating our 25th wedding anniversary, with a trip to Italy, and more specifically the Bay of Naples to see the sites around… Continue reading\n\n\n\n### Dennis Ritchie–RIP\n\nJust read Herb Sutter’s post that Dennis passed away. This is another huge loss for the technology scene over recent weeks. For me, arguably even more pertinent and more relevant than Steve Jobs. Even though I respect what Steve did… Continue reading\n\n\n\n### And this is why Steve Jobs mattered\n\nSteve Jobs–The Crazy Ones Of all the tributes flowing across the web this morning this is probably the one that means most to me, simply put Steve made a difference to this world. And my question to me, and all… Continue reading\n\n\n\n### Phone Hacking and Security Awareness or Not\n\nIt’s enough to send a techie mad, all this talk about phone hacking. We all know that it is not really about hacking a person’s phone but simply getting access to their voicemail by assuming that the majority of people… Continue reading... ### Ctrl-Alt-Del\n\nWell it’s time to reboot this blog, it has lain in lain in hibernation now for more than 2 years and it is not as if I don’t have anything to say. It’s just that, as often is the case,… Continue reading\n\n\n\n### Professions ‘reserved for rich’?\n\nWarning! Potential political points may be made! 
The BBC recently published an article suggesting that Professions ‘reserved for rich’ discussing the fact that top professions such as law and medicine are in fact being reserved for a smaller rather than… Continue reading\n\n\n\n### Google Chrome and IE – is it war?\n\nWhile catching up on news over the weekend I came across Boagworld’s well reasoned post “Can Google Chrome topple IE?”. He asks “The question is whether we will need to start testing our sites in Chrome? Well, take has been… Continue reading\n\n\n\n### Join In – Save Jodrell Bank\n\nAgain we have the problem of the UK missing the point in putting Science development on the Agenda with the plan to remove critical funding for Jodrell Bank’s future in being able to make new discoveries. For the sake of… Continue reading",
"date": "2011-11-23",
"last_updated": "2025-01-28"
},
{
"title": "Hacker News",
"url": "https://news.ycombinator.com/item?id=42605913",
"snippet": "throwawaystress 7 months ago\n\nHow the heck does he have time to post all that amazing stuff, AND be coding open-source, AND have some kind of day job?\n\nMy god, I wish I were that productive.\n\nfunksta 7 months ago\n\nI will add a +1 to your recommendation as well, his blog has been my favourite way to keep up with the AI landscape over the last 18 months. Just the right level of detail and technical depth for me\n\nsimonw 7 months ago\n\nI can write fast because I've been writing online for so long. Most short posts take about ten minutes, longer form stuff usually takes one or two hours.\n\nI also deliberately lower my standards for blogging - I often skip conclusions, and I'll publish a piece when I'm still not happy with it (provided I've satisfied myself with the fact checking side of things - I won't dash something out if I'm not certain it's true, at least to the best of my ability.)\n\nI'm hoping to improve my overall balance a lot for 2025. Deliberately ending my at least one post a day blogging streak is part of that: https://simonwillison.net/2025/Jan/2/ending-a-year-long-post...\n\nedanm 7 months ago... The writing, I understand - you do it relatively quickly because of a lot of practice. 
But I feel like just reading up on the AI news every week takes up a significant amount of time - time that can't be spent researching/building things.\n\nI'm wondering how you balance that.\n\nI've managed to balance building vs writing a lot better in the past - I lost that balance in November and December, I'm trying to get it back for January.\n\nHaving some kind of standard \"I need to integrate this new thing with an existing codebase\" makes a great standard project.\n\nmarojejian 7 months ago\n\npunkspider 7 months ago\n\nDavidPiper 7 months ago\n\nThere are for sure ways to increase your own personal productivity on its own, but the extra kick is usually from in-house cooks, cleaners, shoppers, schedulers, stylists, PAs, etc.\n\nThese people may or may not be spouses, family, friends and so on.\n\n(This is a general response, I do not know Simon Willison or any of his work or life.)\n\nWe do have a couple of hours of cleaning help once a week but other than that my partner and I split the chores.\n\nDavidPiper 6 months ago\n\nidamantium 7 months ago... My brother is an \"influencer\" in the legit sense that he makes all his money from having a following (mostly through brand partnerships). He only gets help for very specific tasks on a project-by-project basis and even then he doesn't do that very often. He loves working alone and the freedom that comes from that.\n\nhttps://unnecessaryinventions.com/about-ui/\n\npolishdude20 7 months ago\n\npowersnail 7 months ago\n\n\n\nHow the heck does he have time to post all that amazing stuff, AND be coding open-source, AND have some kind of day job?\n\nMy god, I wish I were that productive.",
"date": "2025-01-05",
"last_updated": "2025-08-07"
},
{
"title": "Archive - Simon Willison's Newsletter",
"url": "https://simonw.substack.com/archive",
"snippet": "Simon Willison’s Newsletter\n\nSubscribe\n\nSign in\n\nHome\n\nArchive\n\nAbout\n\nLatest\n\nTop\n\nDiscussions\n\nGPT-5-Codex, plus updated Gemini 2.5 Flash and Flash Lite\n\nAnd a flurry of new models from Qwen\n\n5 hrs ago\n\n\n\nSimon Willison\n\n8\n\nI think \"agent\" may finally have a widely enough agreed upon definition to be useful jargon now\n\nPlus GPT-5-Codex, Qwen3-Next-80B, Claude Memory and more\n\nSep 18\n\n\n\nSimon Willison\n\n64\n\n6\n\nClaude's new Code Interpreter\n\nPlus: Recreating the Apollo AI adoption rate chart with GPT-5, Python and Pyodide\n\nSep 9\n\n\n\nSimon Willison\n\n35\n\n2\n\nGPT-5 Thinking in ChatGPT (aka Research Goblin) is shockingly good at search\n\nAnd why the $1.5bn Anthropic books settlement may count as a win for Anthropic\n\nSep 7\n\n\n\nSimon Willison\n\n55\n\n3\n\nV&A East Storehouse and Operation Mincemeat in London\n\nPlus DeepSeek 3.1, gpt-realtime, and prompt injection against browser agents\n\nSep 2\n\n\n\nSimon Willison\n\n22\n\nAugust 2025... 
Prompt injections as far as the eye can see\n\nPlus open weight LLM performance across providers, Qwen Image Edit, Gemma 3 270M\n\nAug 21\n\n\n\nSimon Willison\n\n37\n\nLLM 0.27, with GPT-5 and improved tool calling\n\nQwen3-4B-Thinking: \"This is art - pelicans don't ride bikes!\"\n\nAug 12\n\n\n\nSimon Willison\n\n23\n\n1\n\nGPT-5: Key characteristics, pricing and model card\n\nPlus OpenAI's open weight models and a bunch more stuff about ChatGPT\n\nAug 8\n\n\n\nSimon Willison\n\n39\n\n6\n\nReverse engineering some updates to Claude\n\nPlus Qwen 3 Coder Flash, Gemini Deep Think, kimi-k2-turbo-preview\n\nAug 1\n\n\n\nSimon Willison\n\n32\n\n1\n\nJuly 2025\n\nMy 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX\n\nPlus the system prompt behind the new ChatGPT study mode\n\nJul 30\n\n\n\nSimon Willison\n\n32\n\n4\n\nUsing GitHub Spark to reverse engineer GitHub Spark\n\nPlus three huge new open weight model releases from Qwen\n\nJul 26\n\n\n\nSimon Willison... 36\n\n1\n\nVibe scraping on an iPhone with OpenAI Codex\n\nPlus celebrating Django's 20th birthday\n\nJul 18\n\n\n\nSimon Willison\n\n39\n\n2\n\n© 2025 Simon Willison\n\nPrivacy\n\n\n\nTerms\n\n\n\nCollection notice\n\nStart writing\n\nGet the app\n\nSubstack\n\nis the home for great culture\n\nThis site requires JavaScript to run correctly. Please\n\nturn on JavaScript\n\nor unblock scripts",

"date": "2025-09-25",

"last_updated": "2025-09-26"

},

{

"title": "Simon Willison: Using LLMs for Python Development | Real Python Podcast #236",

"url": "https://www.youtube.com/watch?v=CH_AQJ2--FI",

"snippet": "##### Jan 24, 2025 (1:22:04)\nWhat are the current large language model (LLM) tools you can use to develop Python? What prompting techniques and strategies produce better results? This week on the show, we speak with Simon Willison about his LLM research and his exploration of writing Python code with these rapidly evolving tools.\n\n👉 Links from the show: https://realpython.com/podcasts/rpp/236/\n\nSimon has been researching LLMs over the past two and a half years and documenting the results on his blog. He shares which models work best for writing Python versus JavaScript and compares coding tools and environments.\n\nWe discuss prompt engineering techniques and the first steps to take. Simon shares his enthusiasm for the usefulness of LLMs but cautions about the potential pitfalls.\n\nSimon also shares how he got involved in open-source development and Django. He's a proponent of starting a blog and shares how it opened doors for his career. \n\nThis episode is sponsored by Postman.\n\nTopics:... - 00:00:00 -- Introduction\n- 00:02:38 -- How did you get involved in open source?\n- 00:04:04 -- Writing an XML-RPC library\n- 00:04:40 -- Working on Django in Lawrence, Kansas\n- 00:05:31 -- Started building open-source collection\n- 00:06:52 -- shot-scraper: taking automated screenshots of websites\n- 00:08:09 -- First experiences with LLMs\n- 00:10:08 -- 22 years of simonwillison.net\n- 00:18:22 -- Navigating the hype and criticism of LLMs\n- 00:22:14 -- Where to start with Python code and LLMs?\n- 00:26:22 -- Sponsor: Postman\n- 00:27:13 -- ChatGPT Canvas vs Code Interpreter\n- 00:28:23 -- Asking nicely, tricking the system, and tipping?\n- 00:30:35 -- More Code Interpreter and building a C extension\n- 00:32:05 -- More details on Canvas \n- 00:36:55 -- What is a workflow for developing using LLMs?... 
- 00:39:43 -- Creating pieces of code vs a system\n- 00:42:00 -- Workout program for prompting and pitfalls\n- 00:53:54 -- Video Course Spotlight\n- 00:55:14 -- Why an SVG of a pelican riding a bicycle?\n- 00:57:48 -- Repeating a query and refining\n- 01:03:00 -- Working in an IDE or text editor\n- 01:05:45 -- David Crawshaw on writing code with LLMs \n- 01:08:33 -- Running an LLM locally to write code \n- 01:14:02 -- Staying out of the AGI conversation\n- 01:16:07 -- What are you excited about in the world of Python?\n- 01:18:34 -- What do you want to learn next?\n- 01:19:53 -- How can people follow your work online?\n- 01:20:51 -- Thanks and goodbye\n\n👉 Links from the show: https://realpython.com/podcasts/rpp/236/... {ts:0} welcome to the real python podcast this is episode 236 what are the large language model tools you can use to develop python what\n{ts:11} prompting techniques and strategies produce better results this week on the show we speak with Simon Willison about his llm research and exploring writing python code with these rapidly changing tools Simon's been researching llms over the past 2 and a half years and documenting the results on blog he\n{ts:31} shares Which models work best for writing python versus JavaScript and Compares coding tools and environments we discuss prompt engineering techniques and the first steps to take Simon shares his enthusiasm for the usefulness of llms but cautions about the potential pitfalls he also shares how he got\n{ts:50} involved in open source development and Jango he's a proponent of starting a blog and shares how it opened doors for his career this episode is sponsored by Postman Postman is the world's leading API collaboration platform and they just launched Postman AI agent Builder the\n{ts:71} quickest way to build AI agents start building at post man.com SLP podcast python all right let's get started [Music] the real python podcast is a weekly conversation about using python in the real world my name is 
Christopher Bailey... {ts:106} your host each week we feature interviews with experts in the community and discussions about the topics articles and courses found at real python. after the podcast join us and learn real world python skills with a community of experts at real python. comom hey Simon welcome to the show hey\n{ts:124} it's really exciting to be here yeah I'm stoked to talk to you uh I've been mentioning that I've been wanting to get you on the show for a while and we definitely have referenced a bunch of stuff that you've written about throughout the last few years and you're sort of diving into the world of llms and this sort of research and stuff that you do I I can't tear myself away from\n{ts:143} it it's all far too interesting and weird it's like yeah yeah no other area of computer science I've ever dealt with before okay so that's uh maybe part of what we'll dig into is like the sort of fascination of like why why did you get into this and stuff like that what I'd like to start with and I've done this with a handful of people recently is not\n{ts:162} go so far back into like well how'd you get into computer programming but more how did you get involved in open source because you do a lot of Open Source projects in fact I I featured one of your projects recently the shot scraper which I think is a really awesome tool on the show recently and I just feel like you are able to get people people... 
's the simplest like little command online ra I can put around this lightwe that does all of the work and I built that yeah and that's a trick I use a lot like the secret to my productivity is I'm really good at spotting opportunities where it's like oh for a 100 lines of code I will get a\n{ts:461} significant win so I can knock out that 100 lines of code because I happen to know Python and click and GitHub actions and all of these little bits and pieces and I can find new ways to combine them that build something good so you can see the investment return on investment kind\n{ts:477} of thing pretty easily in your head now after doing 800 projects yeah and then you you just pick the easiest ones you're like okay that for the amount of work I put in I will get a really cool thing out the other end that's worth doing so I don't know exactly when you started to dive into llm stuff was it just right as they started to emerge were you working with early ones back in\n{ts:498} four five six years ago so my very first experiment was with with gpt2 back in 2019 I tried a project I got it to generate New York Times headlines for different decades the idea was that you sort of Feed in a bunch of New York... 
're like start searching for something that and then find the result oh I wrote about that clearly that happens to be so much honestly I've got like I said I've got\n{ts:940} 850 projects which means that sometimes I will forget a project exists and I will go looking for a solution something there will be a library that I wrote that I had fallen out of my head that's that's deeply entertaining when that happens yeah yeah do you think it's easier than ever to start a blog well I don't know like one of the things\n{ts:959} I haven't quite got my head around is I keep on hearing rumors that Google doesn't credit new sites nearly as much as it used to but like but these like it's SEO there were rumors about everything so it might be that because my blog has existed effectively for 20 years I've got so much sort of built up like credibility with Google that I get\n{ts:978} yeah credentials I get results and I if that is the case I'd say start your blog now and in 10 years time yeah yeah you know that that that investment starts paying off in terms of the the search ranking although who knows what search will look like in three years time at this point you know yeah I feel like the benefits that you outlined already are there too though the the idea that it... 
ve got our own ways around this now and the other thing I think that's\n{ts:1054} really important I keep on focusing on the idea of credibility like building credibility is so important like when I'm looking for sources of information I look for people who have earned credibility with me and I want to earn credibility myself with other people and having like 20 years of blog content gives you instant credibility like I can\n{ts:1072} point you to my sqlite tag on my blog which goes back to 2003 when I first heard about sqlite you know right yeah and that so I love that I feel like and that's the kind of thing where credibility is accumulated over time and it doesn't take much like a link blog about a subject run that for six months and you will become one of the top .1%\n{ts:1092} people on Earth for credibility on that subject just from publishing a few notes and linking to a bunch of things about it yeah got to start that's the trick yeah as you started this process of writing about and researching llms I guess maybe we can we can cover some one\n{ts:1109} of these things that you've written about recently at least on social media how people have been saying oh you're a shill about llm which I think is really kind of fascinating because in a way there",

"date": "2025-01-24",

"last_updated": "2025-07-11"

},

{

"title": "Simon Willison: Here's how I use LLMs to help me write code",

"url": "https://www.youtube.com/watch?v=xmX2AkJlhdY",

"snippet": "## Steven Ge\n##### Apr 16, 2025 (0:28:41)\nIn his recent blog (https://simonwillison.net/2025/Mar/11/using-llms-for-code/) , Simon Willison makes the point that writing code with LLM is not easy. This audio is the original post. This is for learning purpose only. Copyright belongs to Simon Willison. \n\n\nUsing LLMs to write code is difficult and unintuitive. It takes significant effort to figure out the sharp and soft edges of using them in this way, and there’s precious little guidance to help people figure out how best to apply them.... {ts:0} from Simon Willis's blog at Simon willis. net posted 11th March 2025 at\n{ts:5} 209 p.m. here's how I use llms to help me write code online discussions about using\n{ts:12} large language models to help write code inevitably produce comments from developers whose experiences have been\n{ts:19} disappointing they often ask what they're doing wrong how come some people are reporting such great results when\n{ts:25} their own experiments have proved lacking using llms to write code is difficult and\n{ts:32} unintuitive it takes significant effort to figure out the sharp and soft edges of using them in this way and there's\n{ts:39} precious little guidance to help people figure out how best to apply them if someone tells you that coding with llms\n{ts:46} is easy they are probably unintentionally misleading you they may well have stumbled onto patterns that\n{ts:52} work but those patterns do not come naturally to everyone I've been getting great results out of llms for code for\n{ts:59} over 2 years now here's my attempt at transferring some of that experience and intuition to you set reasonable\n{ts:66} expectations account for training cuto off dates context is King ask them for options tell them exactly what to do you... 
{ts:216} major breaking change since October 2023 open AI models won't know about it I gain enough value from llms that I now\n{ts:225} deliberately consider this when picking a library I try to stick with libraries with good stability and that are popular\n{ts:232} enough that many examples of them will have made it into the training data I like applying the principles of\n{ts:238} boring technology innovate on your Project's unique selling points stick with tried and\n{ts:244} tested solutions for everything else llms can still help you work with libraries that exist outside their\n{ts:251} training data but you need to put in more work you'll need to feed them recent examples of how those libraries\n{ts:258} should be used as part of your prompt this brings us to the most important thing to understand when\n{ts:264} working with llms context is King most of the craft of getting good results out of an llm comes down to managing its\n{ts:273} context the text that is part of your current conversation this context isn't just the prompt that you have fed it\n{ts:280} successful llm interactions usually take the form of conversations and the context consists of every message from... 
'm too lazy to find it I\n{ts:817} accept all always I don't read the diffs anymore when I get error messages I just copy paste them in with no comment\n{ts:825} usually that fixes it Andre suggests this is not too bad for throwaway weekend projects it's also a fantastic\n{ts:832} way to explore the capabilities of these models and really fun the best way to learn llms is to play with\n{ts:839} them throwing absurd ideas at them and Vibe coding until they almost sort of work is a genuinely useful way to\n{ts:846} accelerate the rate at which you build intuition for what works and what doesn't I've been Vibe coding since\n{ts:853} before Andre gave it a name my Simon to tools GitHub repository has 77 HTML JavaScript apps and six python apps and\n{ts:861} every single one of them was built by prompting llms I have learned so much from\n{ts:867} building this collection and I add to it at a rate of several new prototypes per week you can try most of mine out\n{ts:874} directly on tools. Simon willis. net a GitHub Pages published version of the repo I wrote more detailed notes on some... {ts:882} of these back in October in everything I built with Claude artifacts this week if you want to see the transcript of the\n{ts:888} chat used for each one it's almost always linked to in the commit history for that page or visit the new Califon\n{ts:896} page for an index that includes all of those links a detailed example using Claude Cod hode\n{ts:903} while I was writing this article I had the idea for that tools. Simon willis. 
netcon page I wanted something I could\n{ts:911} link to that showed the commit history of each of my tools in a more obvious way than GitHub I decided to use that as\n{ts:917} an opportunity to demonstrate my AI assisted coding process for this one I used Claude code\n{ts:924} because I wanted it to be able to run python code directly against my existing tools repository on my laptop running\n{ts:930} the slost command at the end of my session showed me this/ cost total cost 61 total duration 5mth 31 total duration\n{ts:940} wall 17 m 18.7 c the initial project took me just over 17 minutes from start to finish and cost me 61 cents in API... m looking at what it's doing but I've left the implementation details entirely up to the\n{ts:1067} llm the Json looked good to me so I said this is working great write me a new script called build CIF and.pie which\n{ts:1076} looks through that gathered Json file and builds and saves an HTML page the page should be mobile friendly and\n{ts:1082} should list every page with a link to that page and for each one display the commit messages neatly convert new lines\n{ts:1090} to BR and linkify URLs but no other formatting plus the message dates and links to the commits themselves which\n{ts:1097} are in HTTP github.com Simon tools Claude knows how GitHub URLs works so telling it to link to the commits and\n{ts:1105} providing the repo name was enough for it guest gtps tool and/ github.com tools commit laugh d9d f85 c92 7786 B3\n{ts:1117} 44578 for those commit URLs I tend to find Claude has good default taste when it comes to webpage design I said the\n{ts:1125} page should be mobile friendly and left it at that Claude churned away and built me a page that wasn... 't right so I said\n{ts:1133} it's not working right oc. 
HTML had a bunch of commits but in Califon ml there is only one link and heading for the\n{ts:1140} first commit and the rest are shown within that same block there should be separate HTML chunks with links and\n{ts:1147} formatted dates for each of the other commits also the neatly formatted date should include the hhmm as well as the\n{ts:1154} date this Mo it fixed the bug all on its own leaving just two changes I decided to\n{ts:1159} make it's almost perfect but each page should have the commits displayed in the opposite order oldest first and then one\n{ts:1168} last change the pages are currently listed alphabetically let's instead list them\n{ts:1172} with the most recently modified at the top and that was the entire project here's build Califon dopy and the page\n{ts:1179} it generated came out looking pretty good tools caon there was one remaining task I needed to deploy the new Califon\n{ts:1187} as part of my site but I didn't want to check that new Califon HTML page into the repository itself I wanted a custom... {ts:1386} pages settings interface for my repo and found this option GitHub Pages UI shows your site is live at tools. Simon\n{ts:1393} willis. 
net deployed 7 minutes ago then under bed and deployment a source menu shows options for GitHub actions or for\n{ts:1401} deploy from a branch selected my repo was set to deploy from a branch so I switched that over to GitHub actions I\n{ts:1410} manually updated my readme.md to add a link to the new Califon page in this commit which triggered another build\n{ts:1417} this time only two jobs ran and the end result was the correctly deployed site only two in progress workflows now one\n{ts:1425} is the test one and the other is the deploy to GitHub pages one I later spotted another bug some of the links\n{ts:1431} inadvertently included tags in their hre equals which I fixed with another 11 Cent Claude code session be ready for\n{ts:1439} the human to take over I got lucky with this example because it helped illustrate my final\n{ts:1445} Point expect to need to take over llms are no replacement for human intuition and experience I",

"date": "2025-04-16",

"last_updated": "2025-07-19"

},

{

"title": "Posts tagged Simon Willison",

"url": "https://www.timbornholdt.com/blog/tags/simon-willison",

"snippet": "I’m writing about this today because it’s been one of my “can LLMs do this reliably yet?” questions for over two years now. I think they’ve just crossed the line into being useful as research assistants, without feeling the need to check everything they say with a fine-tooth comb.\n\nI still don’t trust them not to make mistakes, but I think I might trust them enough that I’ll skip my own fact-checking for lower-stakes tasks.\n\nThis also means that a bunch of the potential dark futures we’ve been predicting for the last couple of years are a whole lot more likely to become true. Why visit websites if you can get your answers directly from the chatbot instead?\n\nThe lawsuits over this started flying back when the LLMs were still mostly rubbish. The stakes are a lot higher now that they’re actually good at it!\n\nI can feel my usage of Google search taking a nosedive already. I expect a bumpy ride as a new economic model for the Web lurches into view.\n\nI keep thinking of the quote that “information wants to be free”.\n\nAs the capabilities of open-source LLMs continue to increase, I keep finding myself wanting a locally-running model at arms length any time I’m near a computer.\n\nHow many more cool things can I accomplish with computers if I can always have a “good enough” answer at my disposal for virtually any question for free?",

"date": "2024-01-01",

"last_updated": "2025-07-26"

}

],

"id": "64dc1a5d-247f-478a-bcd7-1f683d852aaa",

"server_time": null

}
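The response is a JSON object with a `results` array, where each entry has `title`, `url`, `snippet`, `date` and `last_updated`, plus a request `id`. Below is a minimal Python sketch that uses only the standard library to send the same request as the curl example and pull out the title, URL and date of each result. It is not an official client. The `PERPLEXITY_API_KEY` environment variable and the helper names `build_payload`, `parse_results` and `search` are hypothetical. The optional `max_results` and `country` fields are the ones listed in the parameter table below. Building the `Authorization` header in code also avoids a shell pitfall in the curl example: inside single quotes, `$YOUR_API_KEY` is not expanded.

```python
import json
import os
import urllib.request

API_URL = "https://api.perplexity.ai/search"  # endpoint from the curl example


def build_payload(query, max_results=None, country=None):
    """Build the JSON body; `query` may be a single string or a list of strings."""
    payload = {"query": query}
    if max_results is not None:
        # The parameter table gives 1-20 as the allowed range
        if not 1 <= max_results <= 20:
            raise ValueError("max_results must be between 1 and 20")
        payload["max_results"] = max_results
    if country is not None:
        payload["country"] = country  # ISO 3166-1 alpha-2 code, e.g. "US"
    return payload


def parse_results(response):
    """Flatten the `results` array into (title, url, date) tuples.

    .get() is used so that a missing field yields None instead of raising.
    """
    return [
        (item.get("title"), item.get("url"), item.get("date"))
        for item in response.get("results", [])
    ]


def search(query, api_key=None, **options):
    """POST a query to the Search API and return the decoded JSON response.

    Assumes the key lives in a PERPLEXITY_API_KEY environment variable
    (a hypothetical name) when it is not passed explicitly.
    """
    api_key = api_key or os.environ["PERPLEXITY_API_KEY"]
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(query, **options)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `parse_results(search(["latest simonwillison posts"], max_results=5))` would return a list of `(title, url, date)` tuples for a response like the one above.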

Basic Usage

You can pass the following parameters in the JSON request body to control the output:

| Parameter | Type | Description | Example Value |
|---|---|---|---|
| query | string/list | Search query (a single string, or a list of strings for multi-query search) | "latest AI developments 2024" or ["artificial intelligence trends 2024", "machine learning breakthroughs recent"] |
| max_results | integer | Number of search results to return (1–20, default 10) | 5 |
| max_tokens_per_page | integer | Maximum content tokens extracted per result (default 1024) | 1024, 2048 |
| country | string | ISO 3166-1 alpha-2 country code used to localize results | "US" |