How to serve Markdown instead of HTML for AI Agents

The Bun team uses an interesting technique to make their docs LLM-friendly.

Basically, when an AI agent (Claude Code in this case) fetches their docs, the server replies with Markdown instead of HTML.

They claim that this approach reduces token usage by about 10x. And yeah, we all know that LLMs are really good with Markdown.

When Claude Code fetches Bun’s docs, Bun’s docs now send markdown instead of HTML by default

This shrinks token usage for our docs by about 10x pic.twitter.com/cvasTo6h43

Bun (@bunjavascript), September 27, 2025

How does it work?

AI agents don’t include text/html in their Accept header, whereas browsers do.

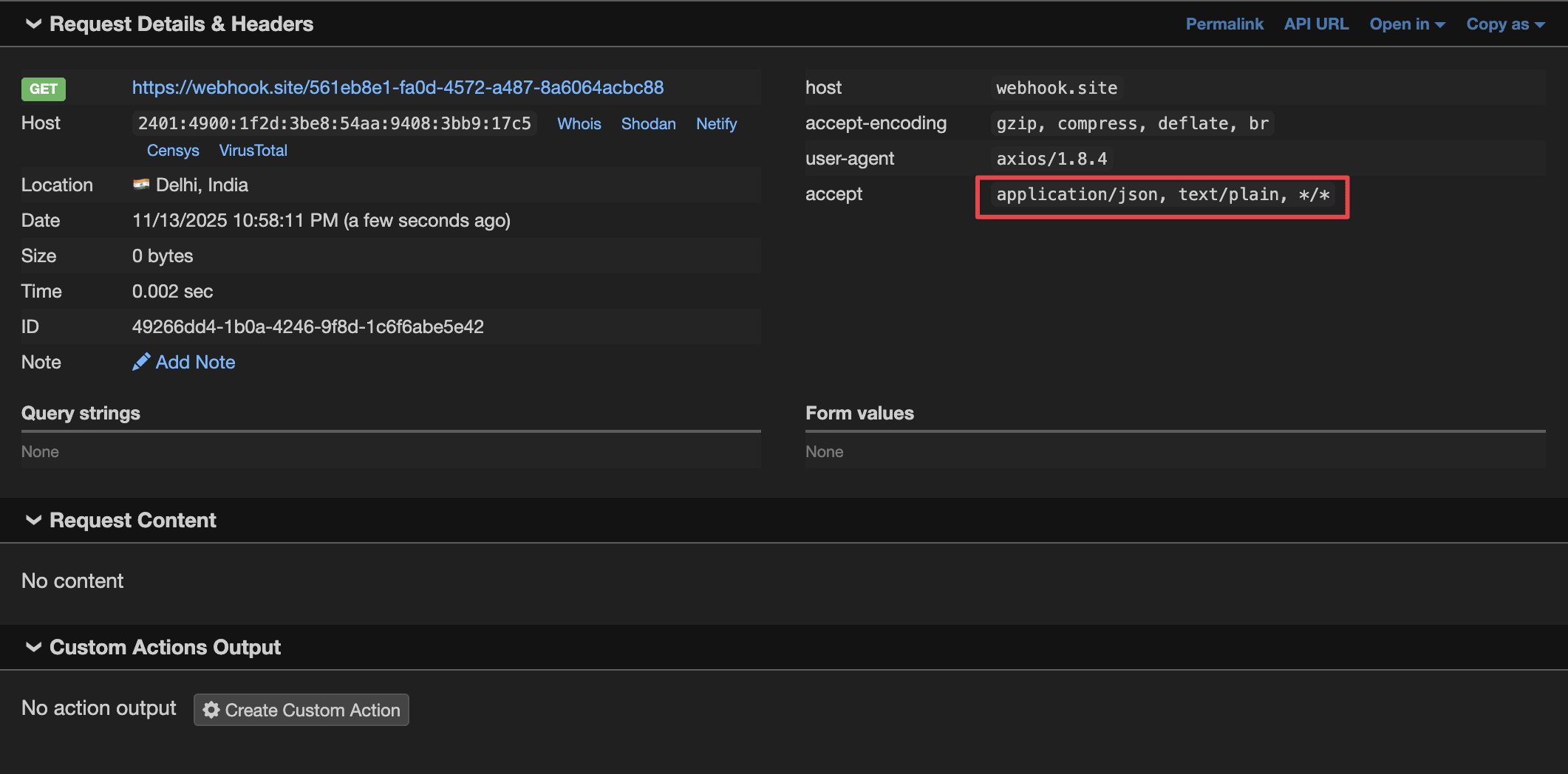

For example, when fetching a URL, Claude Code sends a custom Accept header.

Instead of the default */*, it sends application/json, text/plain, */*

The Bun team uses this to identify agent requests and serve the content accordingly.

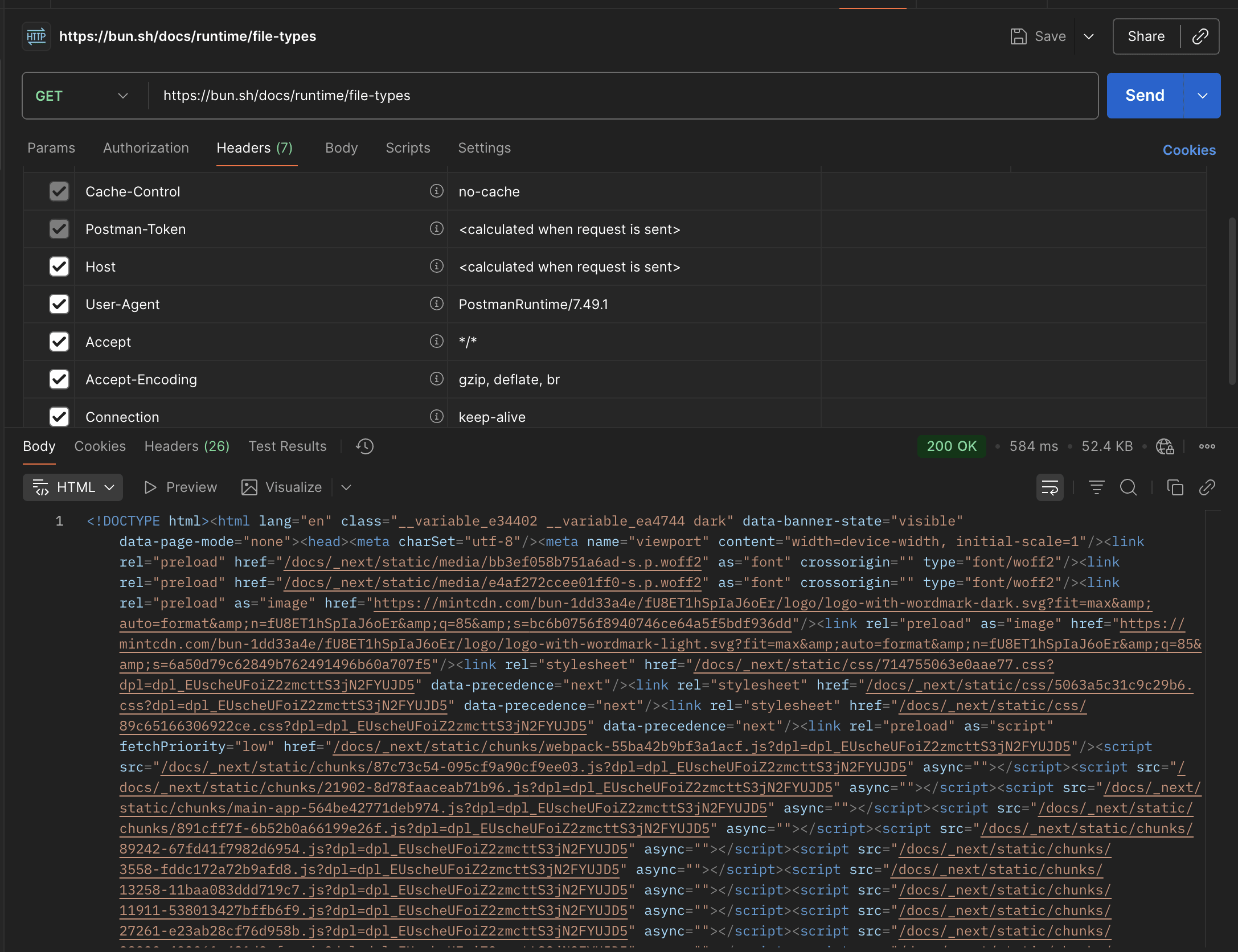
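The detection step can be sketched in a few lines. This is only an illustration of the idea, not Bun's actual implementation: `pickFormat` and `serveDoc` are hypothetical names, and the rule used here (any request whose Accept header does not list text/html is treated as an agent) is an assumption based on the description above.

```typescript
// Hypothetical sketch: choose a response format from the raw Accept header.
// Assumption: a client that never lists text/html is treated as an agent.
type DocFormat = "markdown" | "html";

function pickFormat(acceptHeader: string | null): DocFormat {
  // No header at all: fall back to HTML, the safe default for unknown clients.
  if (acceptHeader === null || acceptHeader.trim() === "") return "html";

  // Reduce "a/b;q=0.9, c/d" to bare media types: ["a/b", "c/d"].
  const mediaTypes = acceptHeader
    .split(",")
    .map((part) => part.split(";")[0].trim().toLowerCase());

  const listsHtml = mediaTypes.some((type) => type === "text/html");
  return listsHtml ? "html" : "markdown";
}

interface DocResponse {
  headers: { "Content-Type": string; Vary: string };
  body: string;
}

// Hypothetical handler core: `page` holds both renderings of one docs page.
function serveDoc(
  acceptHeader: string | null,
  page: { html: string; markdown: string }
): DocResponse {
  const format = pickFormat(acceptHeader);
  return {
    headers: {
      "Content-Type":
        format === "markdown"
          ? "text/markdown; charset=utf-8"
          : "text/html; charset=utf-8",
      // The body depends on the Accept header, so caches must key on it.
      Vary: "Accept",
    },
    body: format === "markdown" ? page.markdown : page.html,
  };
}
```

One consequence of this rule is that any client that omits text/html gets Markdown, including curl with its default Accept: */*. Whether that is desirable depends on who else fetches the docs.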

For example, this is the response we get when we fetch a docs page:

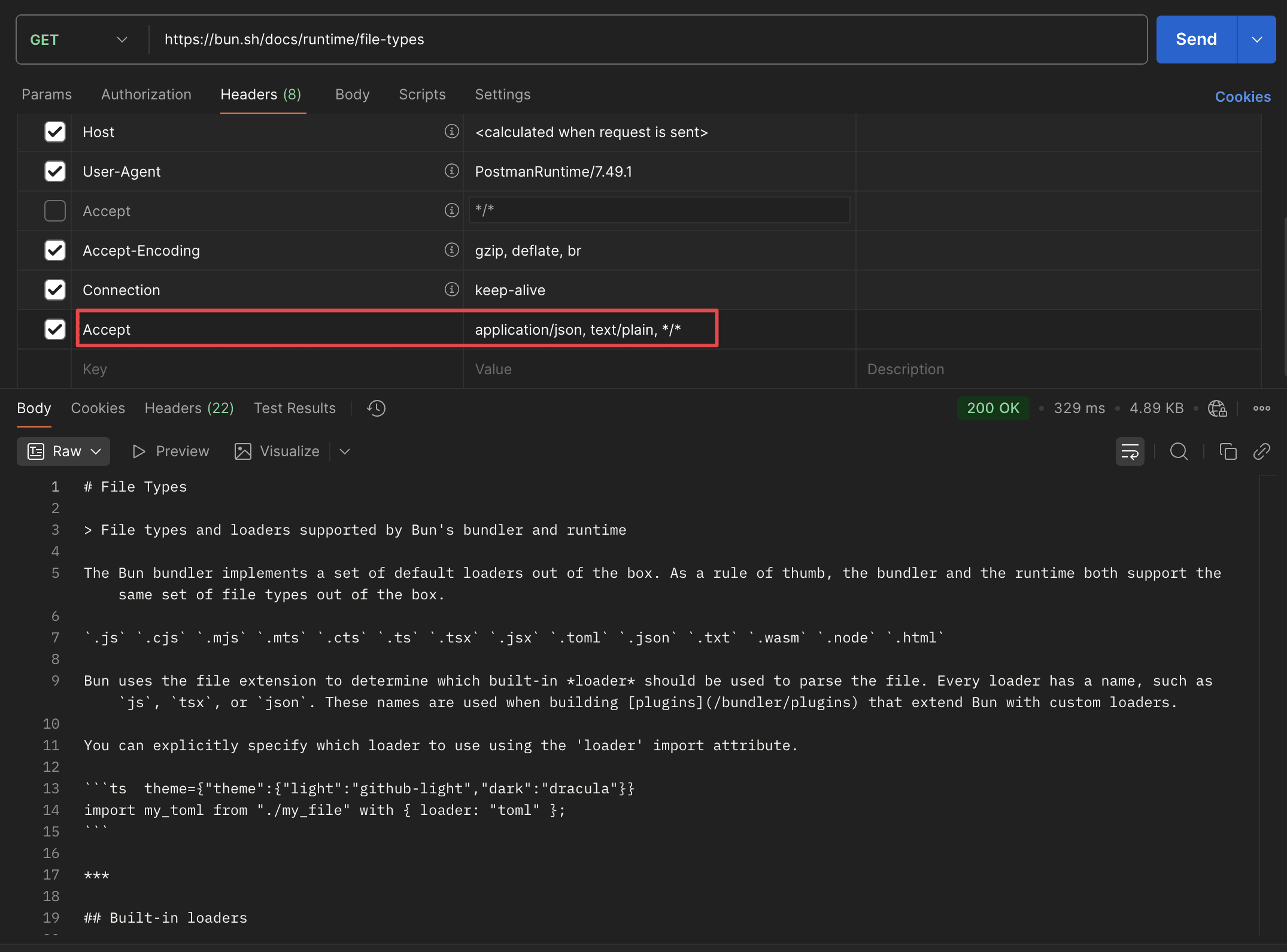

And by sending the Accept: application/json, text/plain, */* header, we get Markdown.
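To reproduce this from a script, a request only needs to carry the same Accept header. The sketch below is a minimal, hedged example: `fetchAsAgent`, `FetchLike`, and `MinimalResponse` are hypothetical names, and the fetch function is passed in as a parameter so the helper can be tried against a stub without touching the network.

```typescript
// Minimal shape of the parts of fetch() this sketch relies on, so the
// helper can be exercised with a stub instead of a real network call.
type MinimalResponse = {
  headers: { get(name: string): string | null };
  text(): Promise<string>;
};
type FetchLike = (
  url: string,
  init: { headers: Record<string, string> }
) => Promise<MinimalResponse>;

// The Accept value that Claude Code is reported to send.
const AGENT_ACCEPT = "application/json, text/plain, */*";

// Hypothetical helper: request a page the way an agent would and report
// the content type that came back alongside the body.
async function fetchAsAgent(fetchImpl: FetchLike, url: string) {
  const response = await fetchImpl(url, { headers: { Accept: AGENT_ACCEPT } });
  return {
    contentType: response.headers.get("content-type"),
    body: await response.text(),
  };
}
```

Passing the runtime's global fetch as `fetchImpl` together with a docs URL should return the Markdown body if the server negotiates as described. The exact Content-Type the server reports is not stated in the source, so the helper simply returns whatever it receives.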

What’s next?

The Claude Code team says that upcoming versions will send Accept: text/markdown when making a request.
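Once agents send text/markdown explicitly, a server could switch from guessing to ordinary content negotiation. The following is a rough sketch under that assumption, not a description of how Bun or Claude Code handle it: `parseAccept`, `weightFor`, and `negotiate` are hypothetical names, and the parser covers only media ranges and the q parameter.

```typescript
type Offer = "text/markdown" | "text/html";
type MediaRange = { type: string; q: number };

// Parse "text/markdown;q=0.9, text/html" into [{ type, q }] entries.
function parseAccept(header: string): MediaRange[] {
  return header
    .split(",")
    .map((part) => part.trim())
    .filter((part) => part.length > 0)
    .map((part) => {
      const pieces = part.split(";").map((piece) => piece.trim());
      let q = 1; // A media range without a q parameter has weight 1.
      for (const param of pieces.slice(1)) {
        const eq = param.indexOf("=");
        if (eq === -1) continue;
        const key = param.slice(0, eq).trim().toLowerCase();
        const value = parseFloat(param.slice(eq + 1));
        if (key === "q" && !isNaN(value)) q = value;
      }
      return { type: pieces[0].toLowerCase(), q };
    });
}

// Weight the client gives one offer: exact match beats "text/*" beats "*/*".
function weightFor(offer: Offer, ranges: MediaRange[]): number {
  const candidates = [offer, offer.split("/")[0] + "/*", "*/*"];
  for (const candidate of candidates) {
    const matches = ranges.filter((range) => range.type === candidate);
    if (matches.length > 0) return matches[0].q;
  }
  return 0; // Not acceptable to the client at all.
}

function negotiate(acceptHeader: string | null): Offer {
  if (!acceptHeader) return "text/html";
  const ranges = parseAccept(acceptHeader);
  const markdown = weightFor("text/markdown", ranges);
  const html = weightFor("text/html", ranges);
  // Ties (for example a bare */*) go to HTML so generic clients are unaffected.
  return markdown > html ? "text/markdown" : "text/html";
}
```

Under this stricter rule, today's application/json, text/plain, */* header ties between the two offers and falls back to HTML, so a server that wants to keep serving current agents would probably keep the text/html heuristic alongside it.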

References

- https://x.com/bcherny/status/1988860326306087102

- https://x.com/bunjavascript/status/1971934734940098971

Happy optimizing docs!