TLDR: Why AI Agent is Not Just Another Buzzword by Chip Huyen

I recently watched Chip Huyen’s talk on the challenges of building AI agents and how to overcome them.

It’s a good one, and I would recommend that anyone check it out if they haven’t already.

Here are some of the key insights/things that I’ve learned from the talk 👇

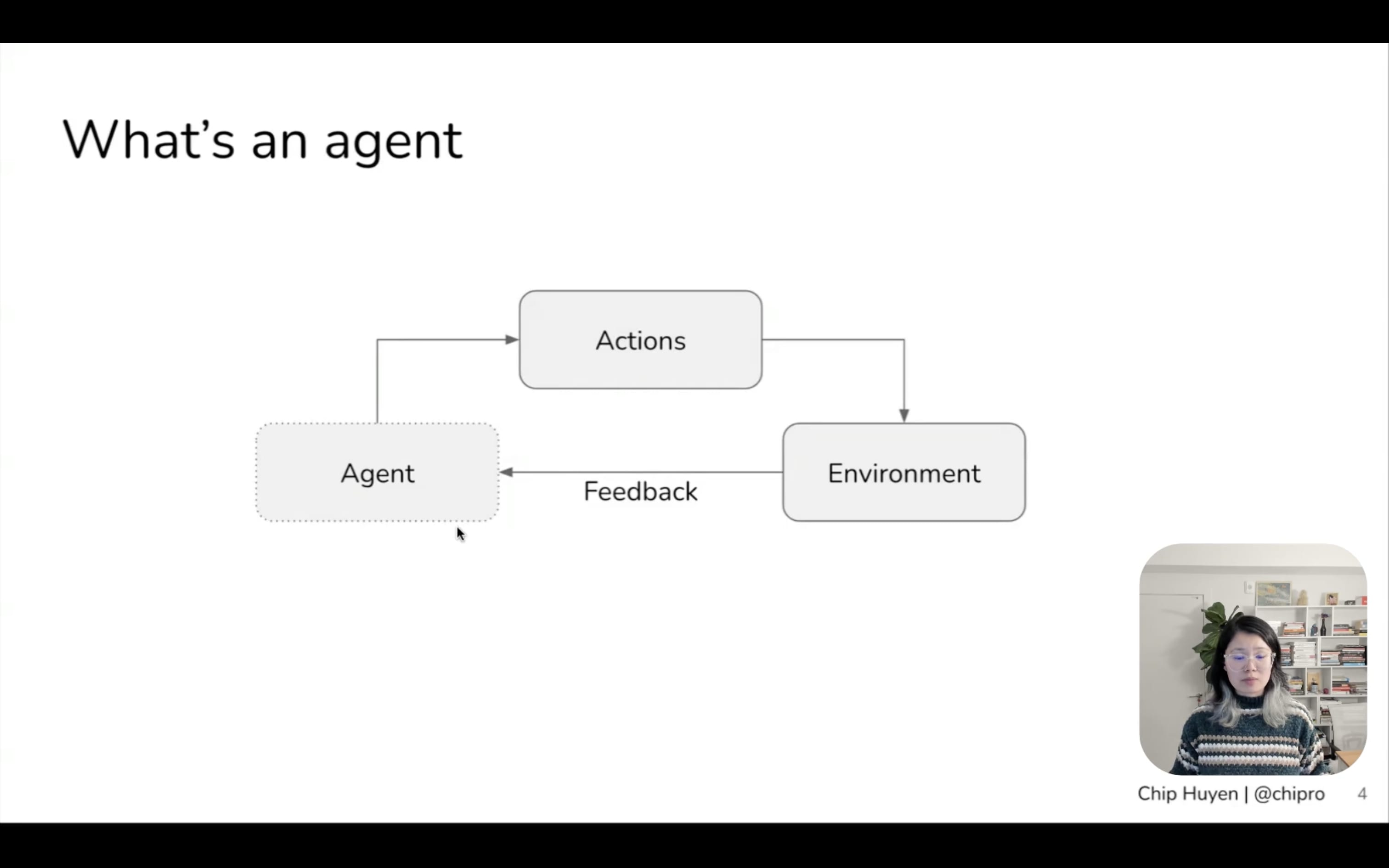

What’s an agent?

Anything that perceives its environment and acts on that environment.

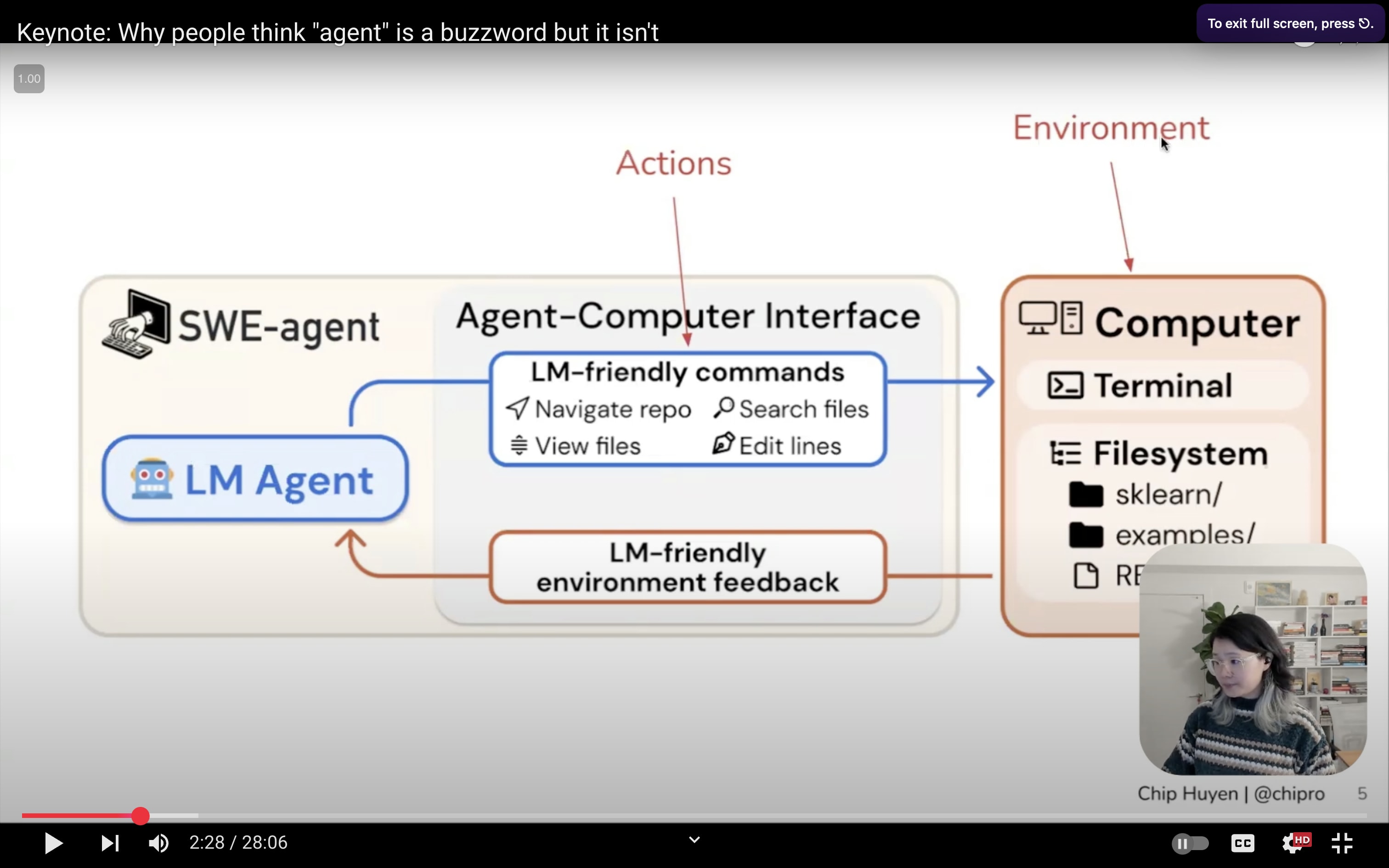

Access to Tools

Giving a model more actions expands its environment, and the environment determines the kinds of actions an agent can perform.

| Agent Type | Environment | Actions | Key Interactions |
|---|---|---|---|
| Chess AI | Chessboard | Move pieces | Game state analysis |
| Coding Assistant | IDE, Files | Code generation | File system, terminal |
| Language model | Text | Processes text | Interacts with text |
| Language model (with access to image captioning model) | Text and Images | Processes text and images | Interacts with text and images |

Why Use Agents?

- Address Model Limitations
  - Help overcome knowledge cutoff dates by using external tools/APIs such as Exa.ai
- Create Multimodal Models
  - Agents can turn text-only or image-only models into multimodal models by giving them access to tools that process different types of data
  - E.g. image to caption, PDF to text, etc.
- Workflow Integration
  - Agents can be integrated into daily tasks by giving them access to tools such as IDEs, inboxes, and calendars

Challenges of Building Agents 🤖

Despite the potential, building agents is challenging:

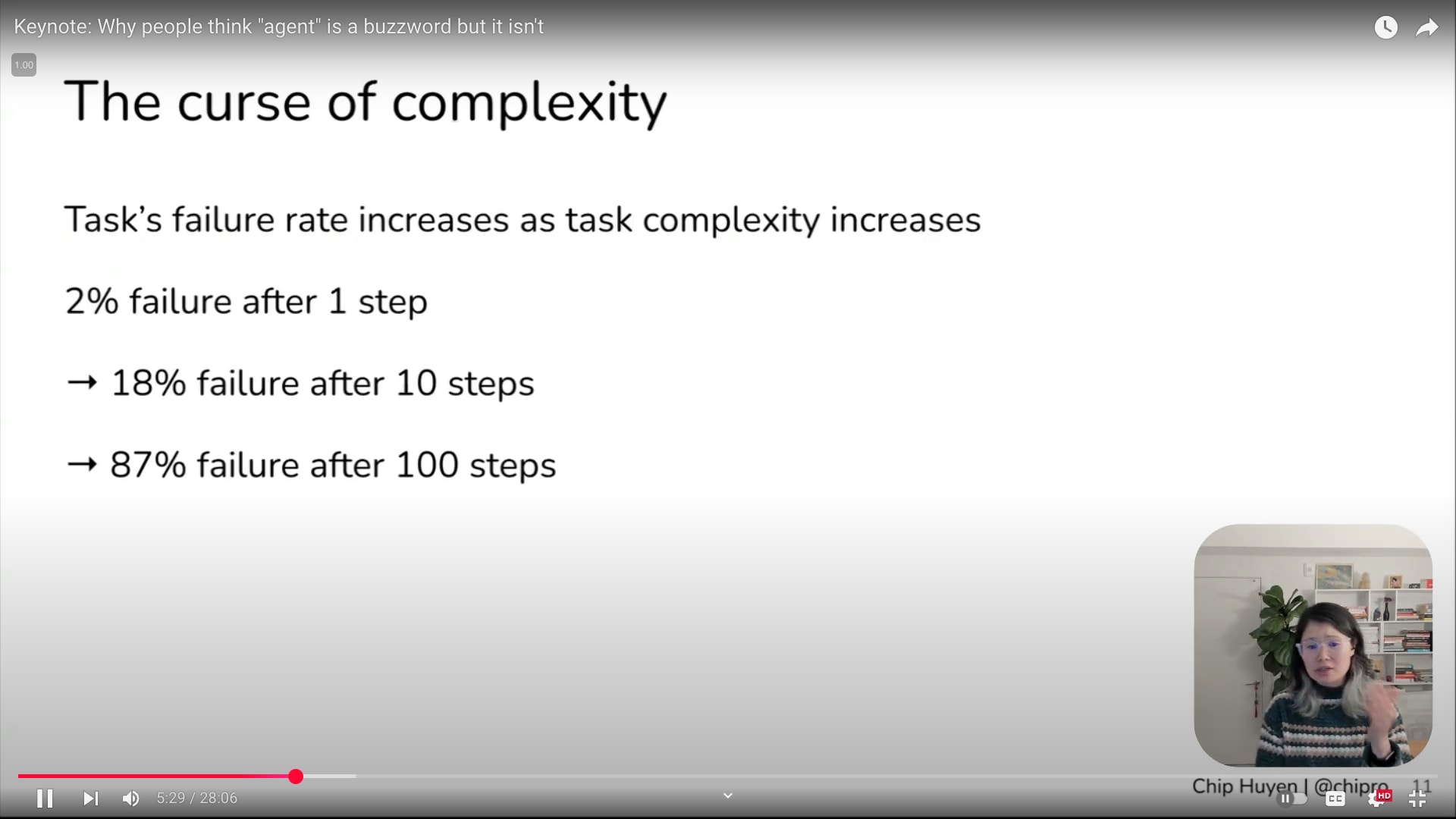

1. Complexity

What is task complexity? The number of steps needed to solve a task.

The Curse of Complexity: 😅

- Task failure rates increase as task complexity grows.
- Many agents use multiple steps, which in turn increases the likelihood of failure.
- This holds not just for AI but for human tasks too.
- It’s kind of a chicken-and-egg problem: an agent often needs to perform multiple steps to accomplish a task, and more steps mean more chances to fail, but:
  - Simple tasks don’t need agents
  - Simple tasks have low economic value
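
To make the curse of complexity concrete, here is a small sketch of how failure compounds. It assumes every step succeeds independently with the same probability, which is a simplification of mine and not something stated in the talk; the 95% figure is also just an illustrative number.

```python
def task_success_rate(step_success: float, num_steps: int) -> float:
    """Chance that every step succeeds, assuming independent steps."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must not be negative")
    return step_success ** num_steps


# Illustrative only: a hypothetical agent that gets each step right 95% of the time.
for n in (1, 5, 10, 20):
    rate = task_success_rate(0.95, n)
    print(f"{n:>2} steps -> {rate:.1%} chance the whole task succeeds")
```

Under these assumptions, a per-step success rate that sounds high still drops to roughly 60% over 10 steps.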

Example:

Though the question seems simple, under the hood an agent needs to perform multiple steps:

| Task | Plan |
|---|---|
| How many people bought products from company X last week? | 1. getProductList 2. getOrderCount 3. sum 4. generateResponse |
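
The four-step plan above could look roughly like this in code. This is only a sketch: the function names mirror the plan steps, but their signatures, the product names, and the order counts are all hypothetical stand-ins for whatever real tools the agent would call.

```python
# Hypothetical data standing in for company X's real systems.
PRODUCTS = ["widget", "gadget"]
ORDER_COUNTS = {"widget": 12, "gadget": 30}


def get_product_list(company: str) -> list[str]:
    """Step 1: list the products the company sells."""
    return list(PRODUCTS)


def get_order_count(product: str, period: str) -> int:
    """Step 2: count the orders for one product in the given period."""
    return ORDER_COUNTS[product]


def generate_response(total: int, company: str, period: str) -> str:
    """Step 4: turn the number into a natural-language answer."""
    return f"{total} orders were placed for company {company} products {period}."


def run_plan(company: str, period: str) -> str:
    products = get_product_list(company)                      # 1. getProductList
    counts = [get_order_count(p, period) for p in products]   # 2. getOrderCount
    total = sum(counts)                                       # 3. sum
    return generate_response(total, company, period)          # 4. generateResponse


print(run_plan("X", "last week"))
```

Every one of these calls is a place where the agent can pick the wrong tool or pass the wrong argument, which is why a question that looks like one step is really four.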

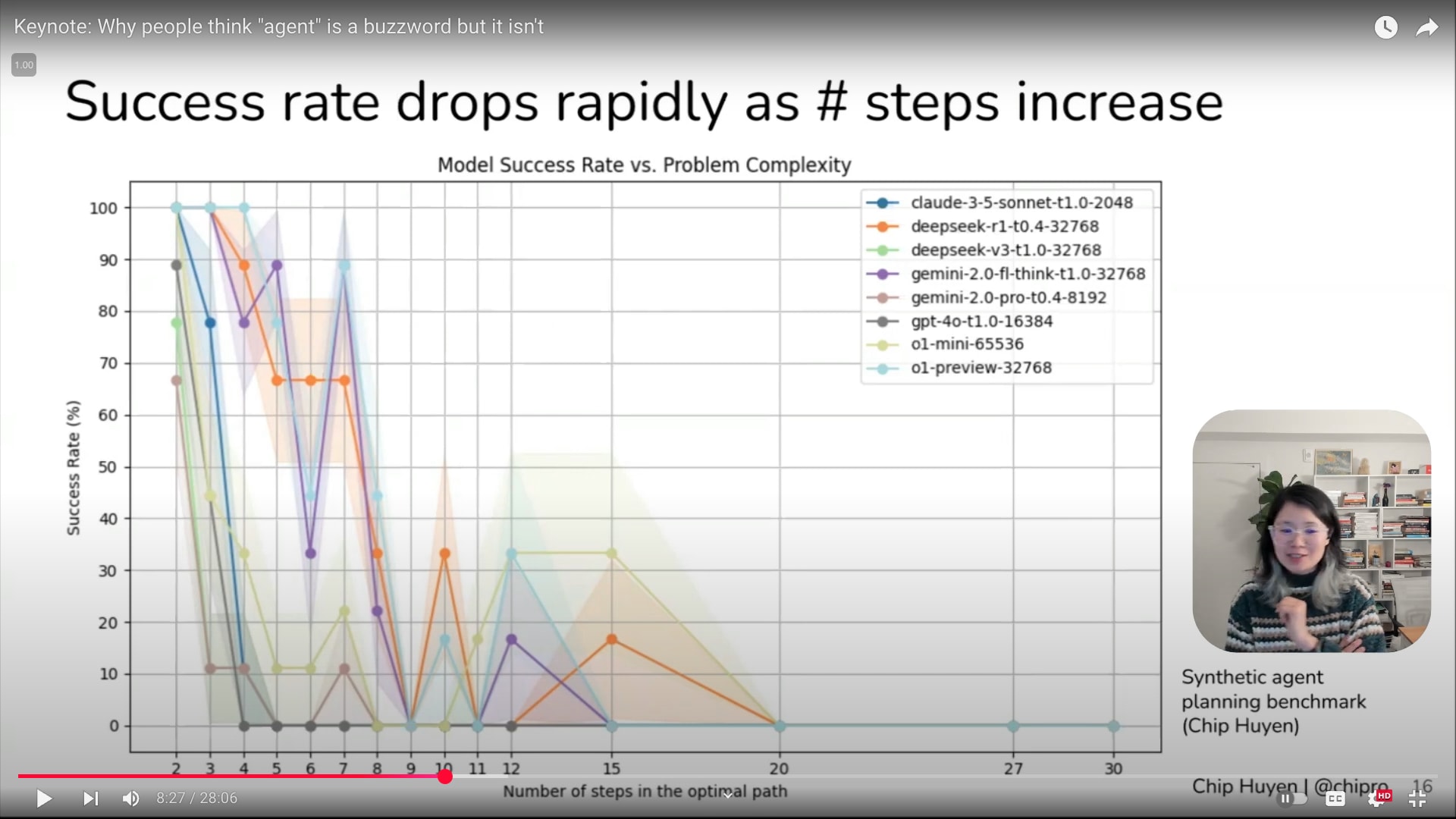

“Most successful agent use-cases involve <= 5 steps” - Chip Huyen

Prediction: Enabling agents to handle more complexity will unlock many new use cases.

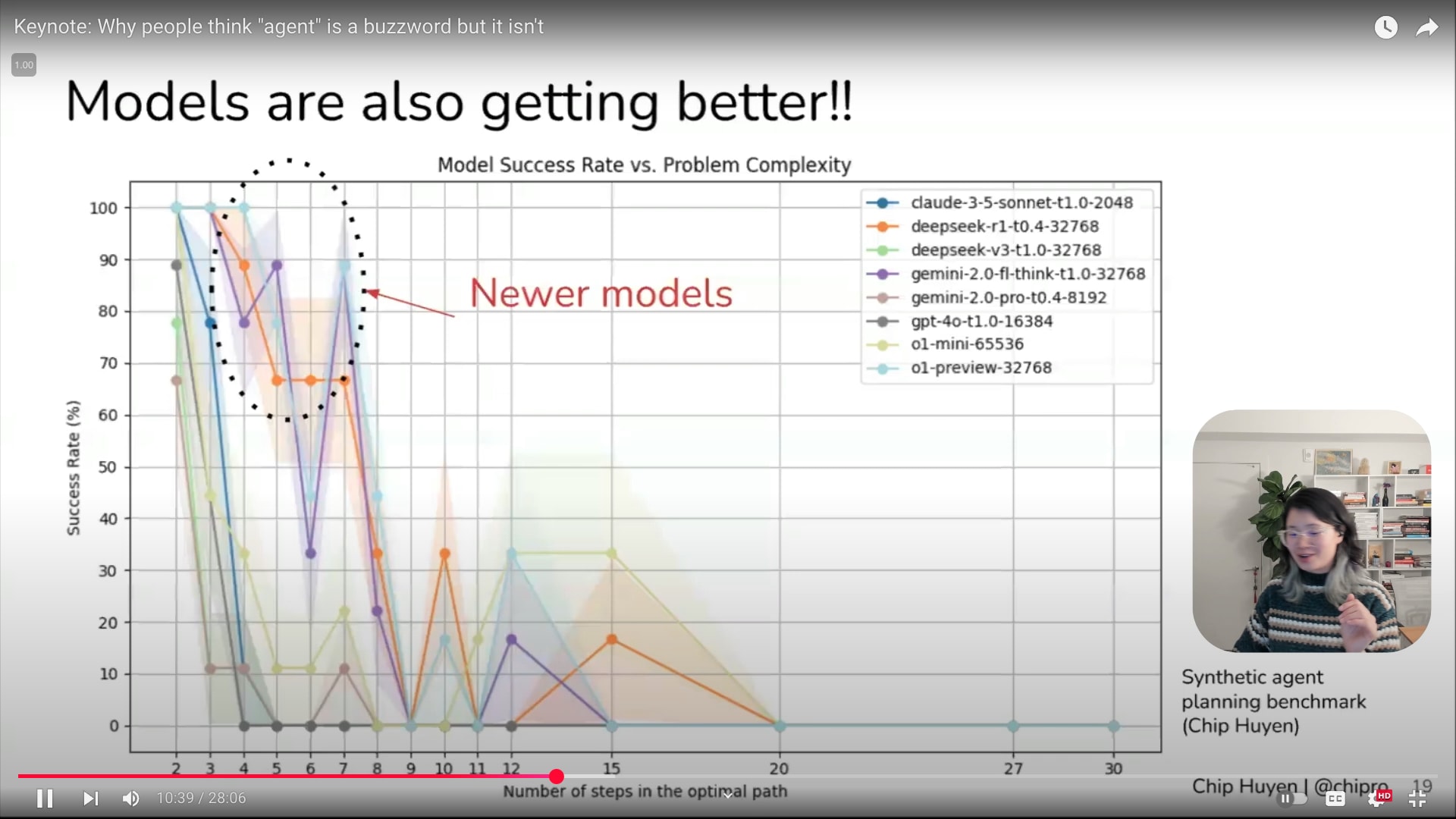

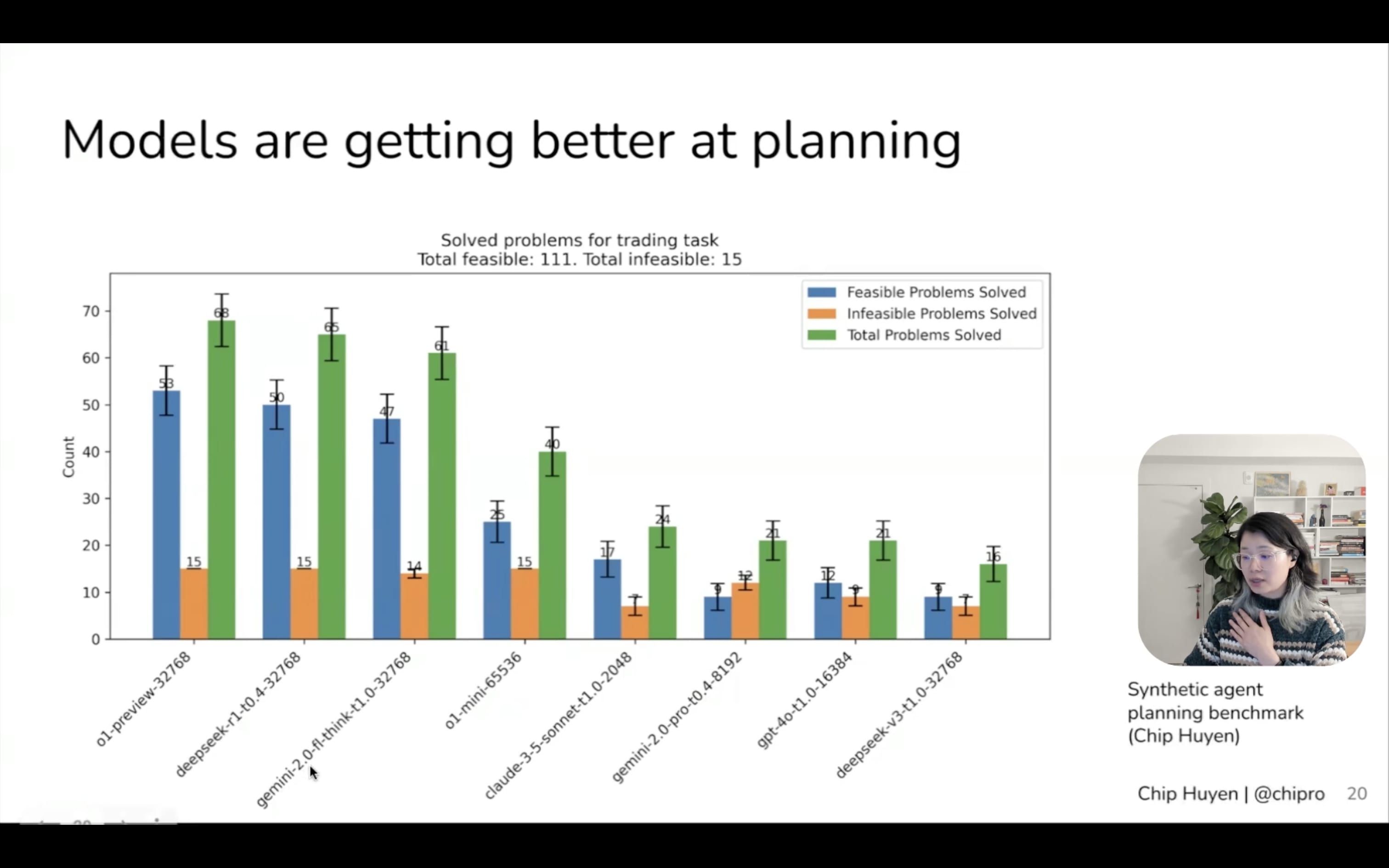

In her benchmark, most models can handle at most 5 steps, and after 10 steps most models fail.

And new models are getting better 💪

[Tip] How to make agents handle more complex tasks?

- Break tasks into subtasks that the agent can solve.
  - If a task requires 6 steps and the agent can only plan 3 steps ahead, break the task into 2 subtasks
- Test-time compute scaling: give the model more compute during inference (as reasoning models do) so that it can spend more tokens thinking, or generate several results and pick the most relevant one.
- Use stronger models (train-time compute scaling)
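
Two of these tips are easy to sketch in code. The first helper chunks a plan so that no subtask exceeds the agent's planning horizon; the second is a bare-bones "generate several results and pick the best" loop. Both are my own minimal illustrations: `generate` and `score` are hypothetical callables you would back with a real model and a real relevance scorer.

```python
from typing import Callable


def split_into_subtasks(steps: list[str], horizon: int) -> list[list[str]]:
    """Chunk a plan into consecutive subtasks of at most `horizon` steps."""
    if horizon < 1:
        raise ValueError("horizon must be at least 1")
    return [steps[i:i + horizon] for i in range(0, len(steps), horizon)]


def best_of_n(generate: Callable[[], str], score: Callable[[str], float], n: int) -> str:
    """Sample n candidates and keep the one with the highest score."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)


# A 6-step task with a planning horizon of 3 becomes 2 subtasks.
plan = ["step1", "step2", "step3", "step4", "step5", "step6"]
print(split_into_subtasks(plan, horizon=3))
```

Real decomposition is harder than slicing a list, since subtasks need to be meaningful units, but the shape of the idea is the same.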

Tool Use 🔨

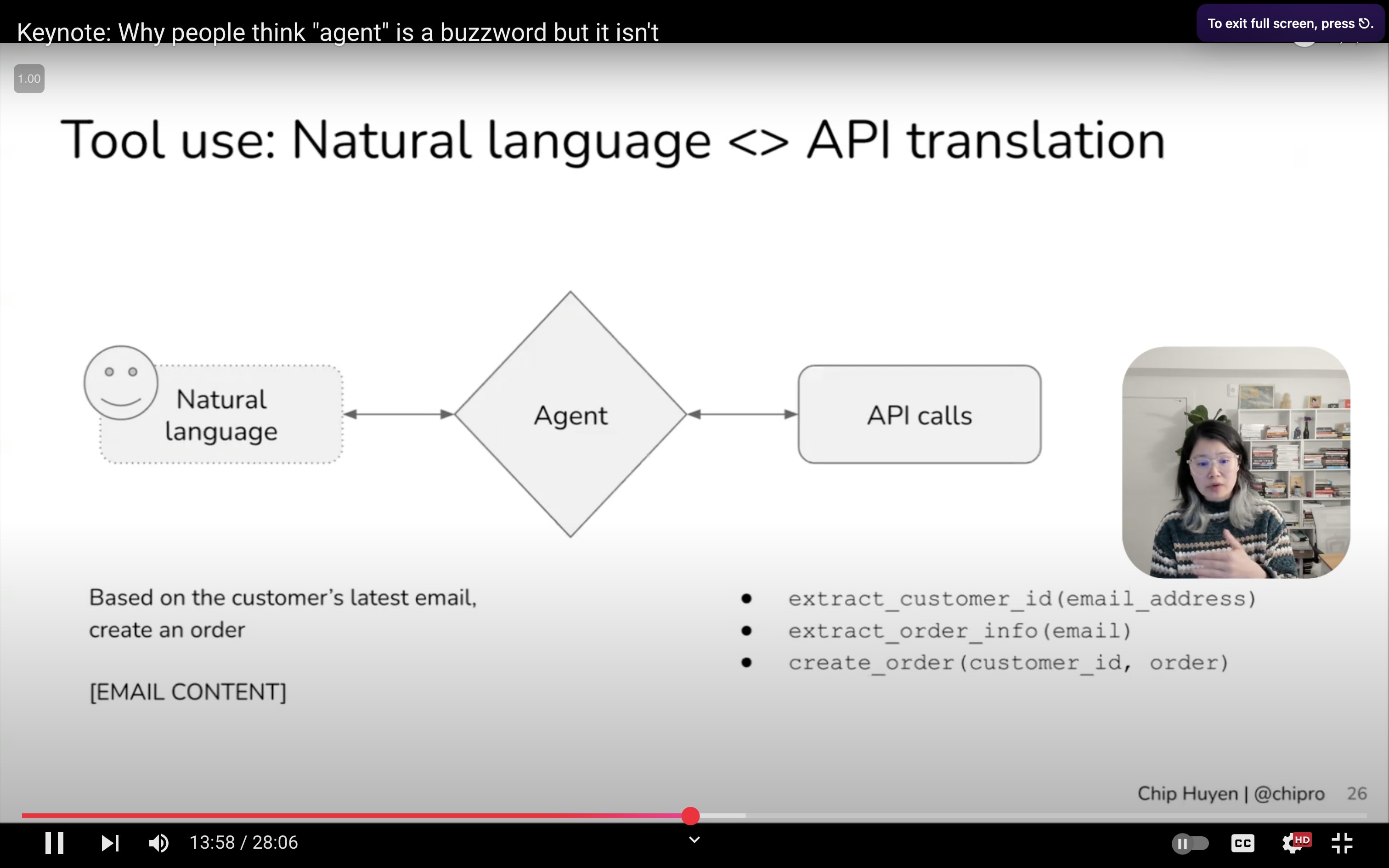

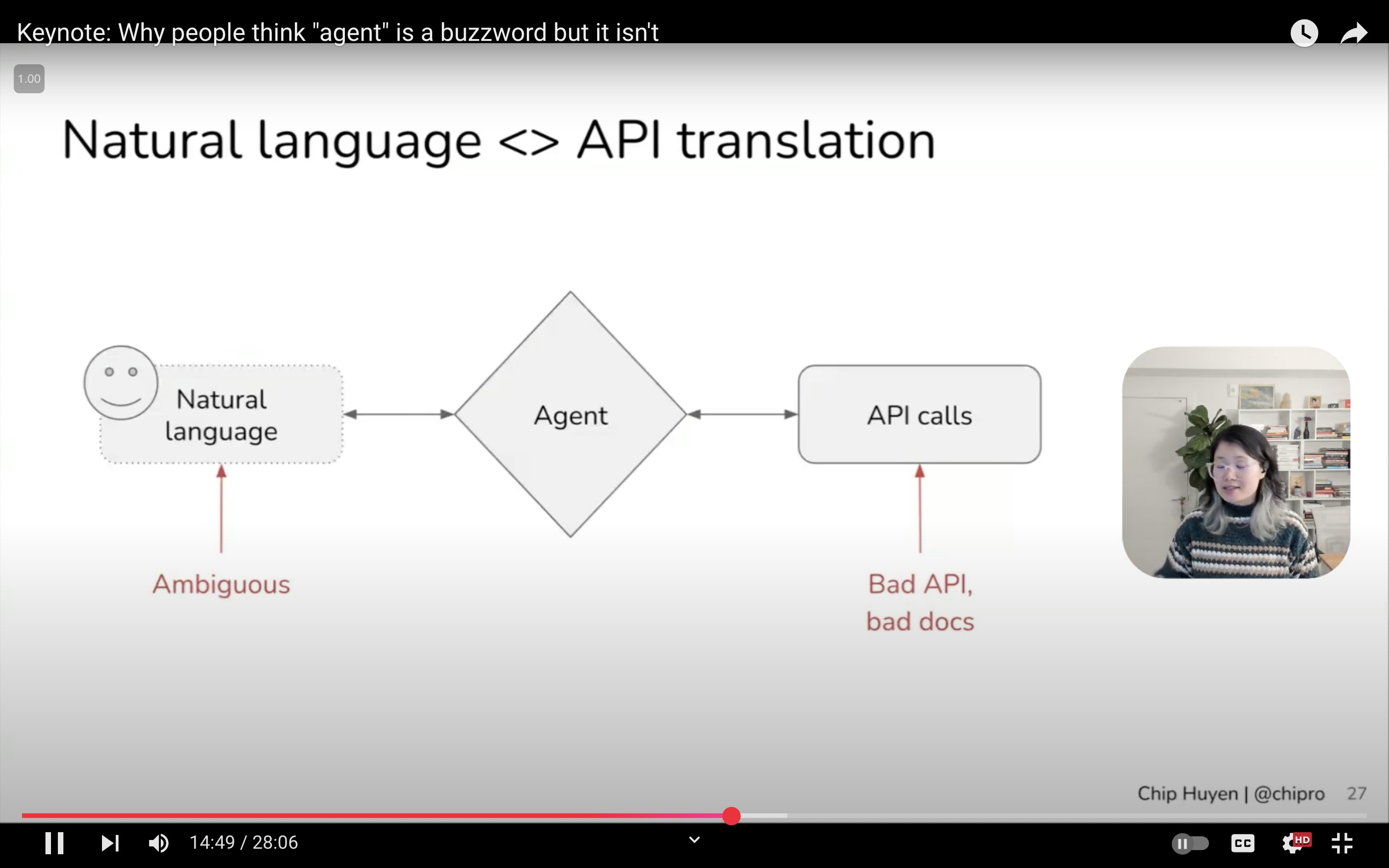

What is tool use?

In simple terms, it’s like Natural Language <> API translation.

Challenges come from both sides of the translation:

- Natural language is extremely ambiguous
- On the API side, we might have a very bad API or very bad documentation

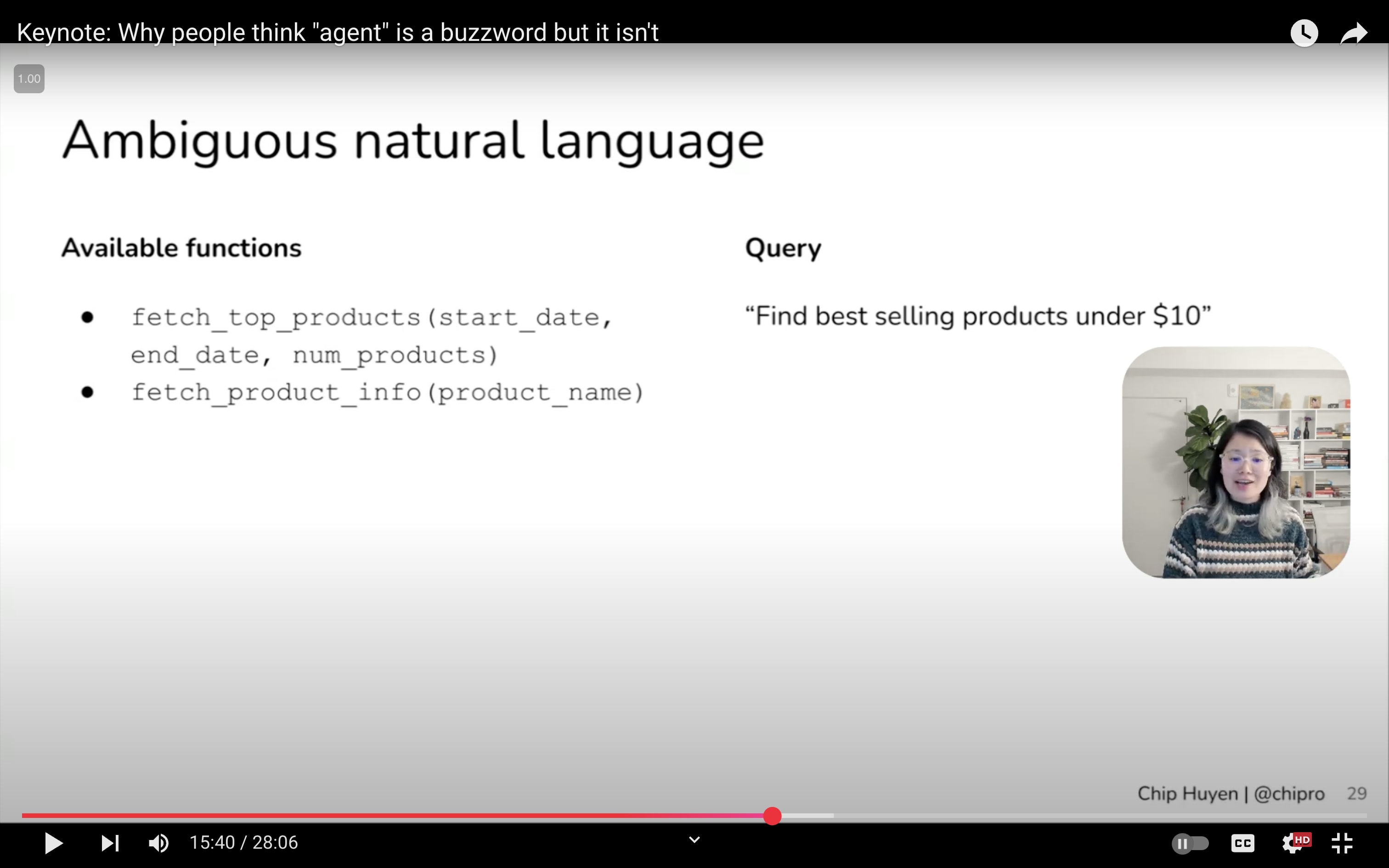

Nuances in seemingly Simple Questions

Even a simple question like “Find best selling products under $10” seems straightforward, but it has a lot of nuances under the hood.

Documentation ✍️

If we can’t explain what a function does to the AI agent, it is going to be really hard for the agent to pick the right one.

Our documentation needs to be as detailed as possible:

- What the function does
- Parameter descriptions
- Error codes
  - What causes the error?
  - What to do if you encounter it?
- Expected returned values
  - How to interpret returned values?
  - If the returned value is 1, what does it mean?
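
Here is what that checklist might look like on an actual tool. The function, its parameters, and the inventory data are all hypothetical; the point is only that the docstring answers each question from the list, including what a return value of 1 means.

```python
import inspect

# Hypothetical inventory data, standing in for a real backend.
_STOCK = {("sku-1", "main"): 1, ("sku-2", "main"): 0}
_WAREHOUSES = {"main"}


def get_stock_level(product_id: str, warehouse: str = "main") -> int:
    """Return the number of units of one product in one warehouse.

    Parameters:
        product_id: Product SKU, e.g. "sku-1".
        warehouse: Warehouse name. Defaults to "main".

    Returns:
        A non-negative count of units. 0 means the product exists but is
        sold out; 1 means exactly one unit is left (it is a count, not a
        success flag).

    Errors:
        ValueError: the warehouse name is unknown. Fix: retry with a
            valid warehouse name.
        KeyError: the product is unknown in that warehouse. Fix: check
            the SKU; retrying with the same arguments will not help.
    """
    if warehouse not in _WAREHOUSES:
        raise ValueError(f"unknown warehouse: {warehouse!r}")
    if (product_id, warehouse) not in _STOCK:
        raise KeyError(f"unknown product: {product_id!r}")
    return _STOCK[(product_id, warehouse)]


# The cleaned-up docstring is the text one might pass to the agent as the tool description.
tool_description = inspect.getdoc(get_stock_level)
print(tool_description)
```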

AI’s tool use !== Human’s tool use

Given a task, what the human annotator does might not be optimal for AI

| Aspect | Human | AI |
|---|---|---|
| Interface | GUIs | APIs |
| Mode of Operation | Sequential | Parallel |
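
The sequential vs parallel row is worth a quick sketch. A human would look up prices one at a time, while an agent working through an API can fire all the lookups at once. The `fetch_price` tool, its prices, and the simulated latency below are made up for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical price data; the sleep simulates network latency.
PRICES = {"pen": 1.5, "notebook": 4.0, "stapler": 9.0}


def fetch_price(product: str) -> float:
    time.sleep(0.05)
    return PRICES[product]


def fetch_sequential(products: list[str]) -> list[float]:
    """Human-style: one lookup after another."""
    return [fetch_price(p) for p in products]


def fetch_parallel(products: list[str]) -> list[float]:
    """Agent-style: issue all lookups at once; results keep input order."""
    if not products:
        return []
    with ThreadPoolExecutor(max_workers=len(products)) as pool:
        return list(pool.map(fetch_price, products))


items = list(PRICES)
start = time.perf_counter()
fetch_sequential(items)
print(f"sequential: {time.perf_counter() - start:.2f}s")

start = time.perf_counter()
fetch_parallel(items)
print(f"parallel:   {time.perf_counter() - start:.2f}s")
```

Both versions return the same answers; the parallel one just waits for the slowest call instead of the sum of all calls.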

[Tip] How to make agents better at tool use?

- Create very good documentation with function descriptions, parameter details, and error codes

- Give agents narrow, well-defined functions

- Use query rewriting and intent classifiers to resolve ambiguity

- Instruct agent to ask for clarification when unsure

- Build specialised action models for specific queries and APIs
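
As a rough illustration of the "ask for clarification" tip, here is a tiny rule-based check that runs before any tool call. The specific ambiguities it looks for (units vs revenue, time period, price before or after discounts) are my own guesses at the nuances in the example query, not a list from the talk, and a real system would likely use an intent classifier rather than string matching.

```python
import re


def clarifying_questions(query: str) -> list[str]:
    """Return questions to ask the user when the query looks ambiguous."""
    q = query.lower()
    questions = []
    if "best selling" in q or "best-selling" in q:
        questions.append("Best selling by units sold or by revenue, and over what period?")
    if re.search(r"under \$\d+", q):
        questions.append("Is the price limit before or after discounts and tax?")
    return questions


def handle(query: str) -> str:
    """Ask for clarification when unsure, otherwise go ahead."""
    questions = clarifying_questions(query)
    if questions:
        return "Before I run this, could you clarify: " + " ".join(questions)
    return f"Running query: {query}"


print(handle("Find best selling products under $10"))
print(handle("List all products"))
```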

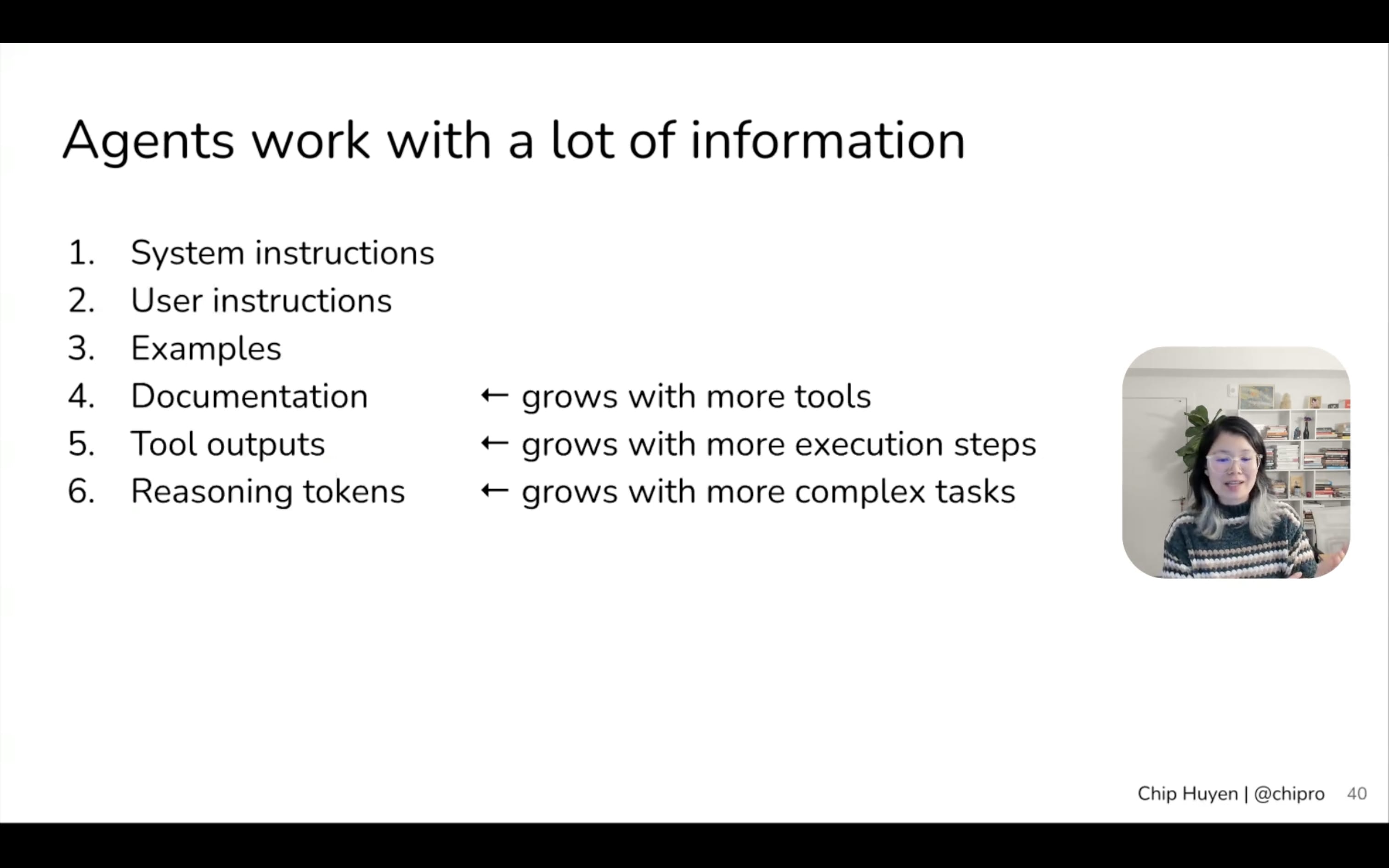

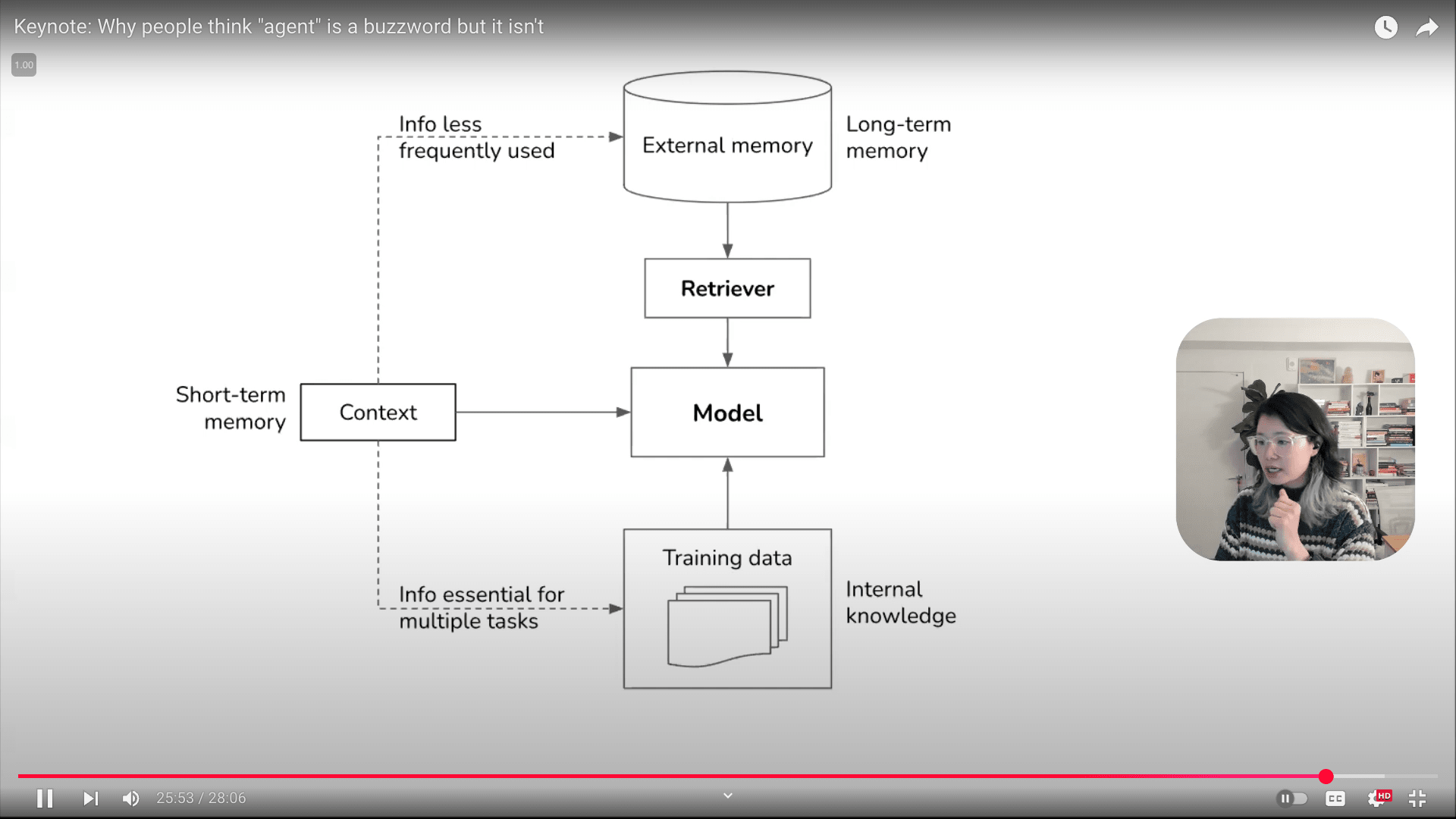

Context Management 📝

- Agents require a lot of context (tool documentation, outputs from previous steps, and reasoning)

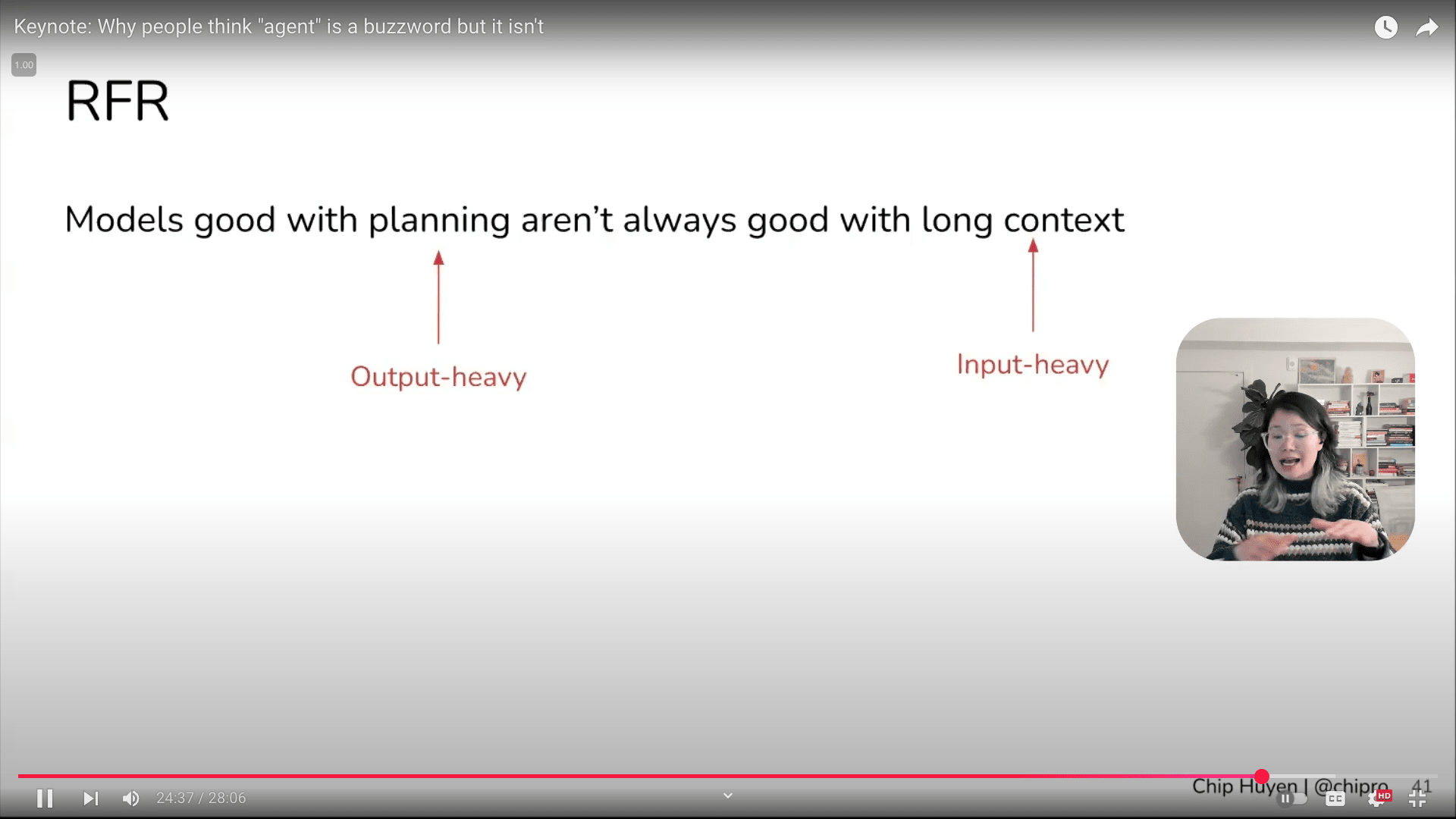

- Models good at planning aren’t necessarily good with long contexts
- Memory management is crucial
  - Use short-term memory (context) for immediate task-relevant information.
  - Supplement with long-term memory (external databases or storage) for less immediate information.
  - Incorporate essential information into the model’s internal knowledge through fine-tuning
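
The short-term vs long-term split can be sketched with a small class. This is a toy of my own making: the "context" is just the last few items, older items spill into a plain list that stands in for an external database, and recall is a naive keyword match rather than real retrieval.

```python
from collections import deque


class AgentMemory:
    """Keep recent items in context; move older ones to long-term storage."""

    def __init__(self, short_term_limit: int = 3):
        if short_term_limit < 1:
            raise ValueError("short_term_limit must be at least 1")
        self.limit = short_term_limit
        self.short_term: deque[str] = deque()
        self.long_term: list[str] = []  # stand-in for an external store

    def remember(self, item: str) -> None:
        self.short_term.append(item)
        # Evict the oldest items once the context budget is exceeded.
        while len(self.short_term) > self.limit:
            self.long_term.append(self.short_term.popleft())

    def context(self) -> list[str]:
        """What would be placed in the model's prompt right now."""
        return list(self.short_term)

    def recall(self, keyword: str) -> list[str]:
        """Naive keyword lookup over long-term storage."""
        return [item for item in self.long_term if keyword.lower() in item.lower()]


memory = AgentMemory(short_term_limit=3)
for note in ["tool docs: search API", "step 1 output", "step 2 output",
             "step 3 output", "step 4 output"]:
    memory.remember(note)

print(memory.context())
print(memory.recall("docs"))
```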

I recently started reading the AI Engineering book by Chip Huyen and it’s pretty good so far. I highly recommend checking it out if you plan on getting into AI engineering and want to build a better foundation 🙌

Happy Building Agents!